Some great or inspiring research works on the topic of curriculum learning.

A curated list of awesome Curriculum Learning resources. Inspired by awesome-deep-vision, awesome-adversarial-machine-learning, awesome-deep-learning-papers, and awesome-architecture-search.

Curriculum learning has become an exciting direction in the AI community.

- Bengio: "..." (ICML 2009)

Biologically inspired learning scheme.

- Learn the concepts by increasing complexity, in order to allow the learner to exploit previously learned concepts and thus ease the abstraction of new ones.

Please help contribute to this list by contacting me or opening a pull request.

Markdown format:

- Paper Name.
  [[pdf]](link) [[code]](link)
  - Key Contribution(s)
  - Author 1, Author 2, and Author 3. *Conference Year*

| Tag | Det | Seg | Cls | Trans | Gen | RL | Other |
|---|---|---|---|---|---|---|---|
| Item | Detection | Semantic Segmentation | Classification | Transfer Learning | Generation | Reinforcement Learning | Others |
| Issues (e.g.) | long-tail | imbalance | imbalance, noisy labels | long-tail, domain shift | mode collapse | exploitation vs. exploration | - |

- Curriculum Learning: A Survey. [arXiv 2101.10382] [pdf]

- Curriculum Learning. [ICML]
  - "Rank the weights of the training examples, and use the rank to guide the order of presentation of examples to the learner."
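The ranking idea above can be sketched in a few lines of Python, assuming every example already carries a scalar difficulty score (lower means easier). The helper names `curriculum_order` and `curriculum_stages`, and the choice of cumulative easy-to-hard stages, are illustrative assumptions rather than the exact procedure of the paper.

```python
from typing import List, Sequence


def curriculum_order(difficulty: Sequence[float]) -> List[int]:
    """Return example indices ranked from easiest to hardest.

    `difficulty` is a hypothetical per-example score (lower = easier).
    `sorted` is stable, so ties keep their original relative order.
    """
    return sorted(range(len(difficulty)), key=lambda i: difficulty[i])


def curriculum_stages(difficulty: Sequence[float], num_stages: int) -> List[List[int]]:
    """Split the ranking into cumulative stages, easiest examples first.

    Stage k (k = 1..num_stages) holds the ceil(k * n / num_stages) easiest
    examples, so each stage re-uses everything presented before it.
    """
    order = curriculum_order(difficulty)
    n = len(order)
    stages = []
    for k in range(1, num_stages + 1):
        cutoff = -(-k * n // num_stages)  # integer ceiling division
        stages.append(order[:cutoff])
    return stages


if __name__ == "__main__":
    scores = [0.9, 0.1, 0.5, 0.3]
    print(curriculum_order(scores))      # [1, 3, 2, 0]
    print(curriculum_stages(scores, 2))  # [[1, 3], [1, 3, 2, 0]]
```

Cumulative stages are one design choice among several; a scheme that drops easy examples once mastered would only change how `cutoff` slices `order`.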

- Learning the Easy Things First: Self-Paced Visual Category Discovery. [CVPR]

- Easy Samples First: Self-paced Reranking for Zero-Example Multimedia Search. [ACM MM]

- Self-paced Curriculum Learning. [AAAI]

- Curriculum Learning of Multiple Tasks. [CVPR]

- A Self-paced Multiple-instance Learning Framework for Co-saliency Detection. [ICCV]

- Multi-modal Curriculum Learning for Semi-supervised Image Classification. [TIP]

- Self-Paced Learning: An Implicit Regularization Perspective. [AAAI]

- SPFTN: A Self-Paced Fine-Tuning Network for Segmenting Objects in Weakly Labelled Videos. [CVPR] [code]

- Curriculum Domain Adaptation for Semantic Segmentation of Urban Scenes. [ICCV] [code]

- Active Self-Paced Learning for Cost-Effective and Progressive Face Identification. [TPAMI] [code]

- Co-saliency detection via a self-paced multiple-instance learning framework. [TPAMI]

- A Self-Paced Regularization Framework for Multi-Label Learning. [TNNLS]

- Reverse Curriculum Generation for Reinforcement Learning. [CoRL]

- Curriculum Learning by Transfer Learning: Theory and Experiments with Deep Networks. [ICML]
  - "Sort the training examples based on the performance of a pre-trained network on a larger dataset, and then finetune the model to the dataset at hand."
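The transfer-based ordering quoted above can be sketched with NumPy, assuming the pre-trained network's softmax outputs on the target dataset are already computed. Scoring difficulty as one minus the probability assigned to the true label is an assumption made for illustration; the paper's own scoring function may differ. The names `transfer_difficulty` and `easy_to_hard` are hypothetical.

```python
import numpy as np


def transfer_difficulty(teacher_probs: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Difficulty = 1 - probability the pre-trained (teacher) model gives the true label.

    teacher_probs: (num_examples, num_classes) softmax outputs of a hypothetical
                   pre-trained network evaluated on the target dataset.
    labels:        (num_examples,) integer class labels.
    """
    true_class_prob = teacher_probs[np.arange(len(labels)), labels]
    return 1.0 - true_class_prob


def easy_to_hard(teacher_probs: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Indices sorted so that examples the teacher is most confident about come first."""
    return np.argsort(transfer_difficulty(teacher_probs, labels), kind="stable")


if __name__ == "__main__":
    probs = np.array([[0.2, 0.8], [0.9, 0.1], [0.6, 0.4]])
    labels = np.array([1, 0, 1])
    print(easy_to_hard(probs, labels))  # [1 0 2]
```

The resulting order would then drive the presentation schedule while the target model is fine-tuned.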

-

MentorNet: Learning Data-Driven Curriculum for Very Deep Neural Networks. [ICML] [code]

-

CurriculumNet: Weakly Supervised Learning from Large-Scale Web Images. [ECCV] [code]

-

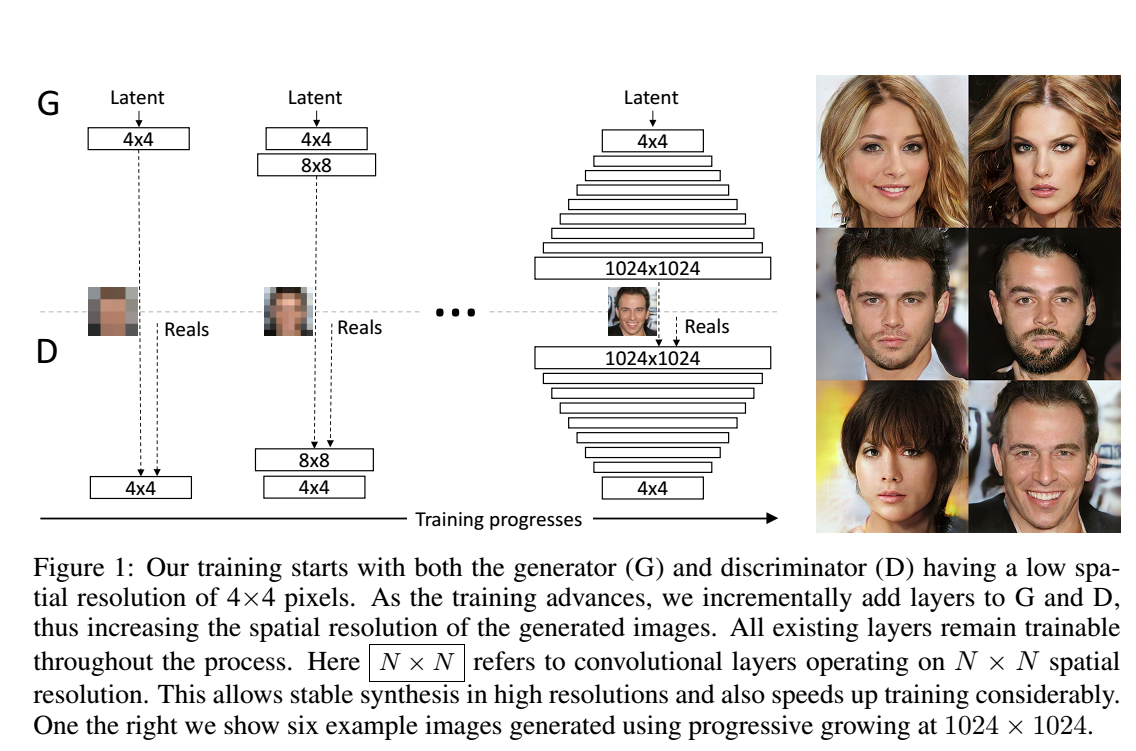

Progressive Growing of GANs for Improved Quality, Stability, and Variation.

Gen[ICLR] [code]- "The key idea is to grow both the generator and discriminator progressively: starting from a low resolution, we add new layers that model increasingly fine details as training progresses. This both speeds the training up and greatly stabilizes it, allowing us to produce images of unprecedented quality."
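The fade-in step that keeps progressive growing stable can be sketched as a linear blend between the upsampled output of the previous (lower-resolution) stage and the output of the newly added layers, with a blend weight `alpha` ramping from 0 to 1. This NumPy sketch assumes nearest-neighbour upsampling and a linear ramp over a hypothetical `fade_steps`; it illustrates the blending rule only, not the full generator or discriminator.

```python
import numpy as np


def upsample_nearest(images: np.ndarray, factor: int = 2) -> np.ndarray:
    """Nearest-neighbour upsampling of an (N, C, H, W) batch."""
    return images.repeat(factor, axis=2).repeat(factor, axis=3)


def fade_alpha(step: int, fade_steps: int) -> float:
    """Blend weight for the new block: ramps linearly from 0 to 1, then stays at 1."""
    return min(1.0, step / fade_steps)


def faded_output(old_rgb: np.ndarray, new_rgb: np.ndarray, alpha: float) -> np.ndarray:
    """Mix the upsampled low-resolution output with the new high-resolution output.

    old_rgb: (N, C, H, W) output of the previous stage.
    new_rgb: (N, C, 2H, 2W) output of the newly added layers.
    """
    return (1.0 - alpha) * upsample_nearest(old_rgb) + alpha * new_rgb


if __name__ == "__main__":
    old = np.zeros((1, 3, 4, 4))
    new = np.ones((1, 3, 8, 8))
    for step in (0, 500, 1000):
        out = faded_output(old, new, fade_alpha(step, fade_steps=1000))
        print(step, out.shape, float(out.mean()))
    # 0 (1, 3, 8, 8) 0.0
    # 500 (1, 3, 8, 8) 0.5
    # 1000 (1, 3, 8, 8) 1.0
```

Because the new layers start with zero weight in the blend, adding them does not abruptly change the output that the discriminator sees.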

- Minimax curriculum learning: Machine teaching with desirable difficulties and scheduled diversity. [ICLR]

- Learning to Teach with Dynamic Loss Functions. [NeurIPS]
  - "A good teacher not only provides his/her students with qualified teaching materials (e.g., textbooks), but also sets up appropriate learning objectives (e.g., course projects and exams) considering different situations of a student."

- Self-Paced Deep Learning for Weakly Supervised Object Detection. [TPAMI]

- Unsupervised Feature Selection by Self-Paced Learning Regularization. [Pattern Recognition Letters]

- Transferable Curriculum for Weakly-Supervised Domain Adaptation. [AAAI] [code]

- Balanced Self-Paced Learning for Generative Adversarial Clustering Network. [CVPR]

- Local to Global Learning: Gradually Adding Classes for Training Deep Neural Networks. [CVPR] [code]

- Dynamic Curriculum Learning for Imbalanced Data Classification. [ICCV] [simple demo]

- Guided Curriculum Model Adaptation and Uncertainty-Aware Evaluation for Semantic Nighttime Image Segmentation. [ICCV] [code]

- On The Power of Curriculum Learning in Training Deep Networks. [ICML] [pdf]

- Data Parameters: A New Family of Parameters for Learning a Differentiable Curriculum. [NeurIPS] [code]

- Leveraging prior-knowledge for weakly supervised object detection under a collaborative self-paced curriculum learning framework. [IJCV]

- Curriculum Model Adaptation with Synthetic and Real Data for Semantic Foggy Scene Understanding. [IJCV]

- BBN: Bilateral-Branch Network with Cumulative Learning for Long-Tailed Visual Recognition. [CVPR] [code]

- CurricularFace: Adaptive Curriculum Learning Loss for Deep Face Recognition. [CVPR] [code]
  - "our CurricularFace adaptively adjusts the relative importance of easy and hard samples during different training stages. In each stage, different samples are assigned with different importance according to their corresponding difficultness."
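The adaptive re-weighting described in the quote can be sketched as follows. This is a simplified NumPy reading of CurricularFace-style logit modulation, assuming L2-normalised embeddings so that `cos` holds cosine similarities: the positive logit gets an additive angular margin, negatives that are still easier than the margin-adjusted positive are left unchanged, and harder negatives are scaled by `t + cos`, where `t` tracks an exponential moving average of the mean positive cosine. The default `margin`, `scale`, and `momentum` values are assumptions, and the edge-case handling for `theta_y + margin > pi` that ArcFace-style implementations usually include is omitted.

```python
import numpy as np


def curricular_logits(cos: np.ndarray, labels: np.ndarray, t: float,
                      margin: float = 0.5, scale: float = 64.0) -> np.ndarray:
    """CurricularFace-style modulation of cosine logits (simplified sketch).

    cos:    (N, C) cosine similarities between normalised embeddings and class centres.
    labels: (N,) integer ground-truth classes.
    t:      curriculum parameter, updated during training by `update_t`.
    """
    rows = np.arange(len(labels))
    cos_y = cos[rows, labels]
    theta_y = np.arccos(np.clip(cos_y, -1.0, 1.0))
    target = np.cos(theta_y + margin)  # additive angular margin on the positive logit

    out = cos.copy()
    # A negative counts as "hard" when it exceeds the margin-adjusted positive.
    hard = cos > target[:, None]
    hard[rows, labels] = False
    # Hard negatives are modulated by (t + cos); easy negatives keep their value.
    out[hard] = cos[hard] * (t + cos[hard])
    out[rows, labels] = target
    return scale * out


def update_t(t: float, cos_y: np.ndarray, momentum: float = 0.99) -> float:
    """Exponential moving average of the mean positive cosine (momentum value assumed)."""
    return momentum * t + (1.0 - momentum) * float(cos_y.mean())
```

For hard negatives with positive cosine, `t + cos` is below one while `t` is small, so they are damped early on; as `t` grows the factor exceeds one and they gain weight, which is the easy-to-hard shift the quote describes.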

-

Curriculum Manager for Source Selection in Multi-Source Domain Adaptation. [ECCV][code]

-

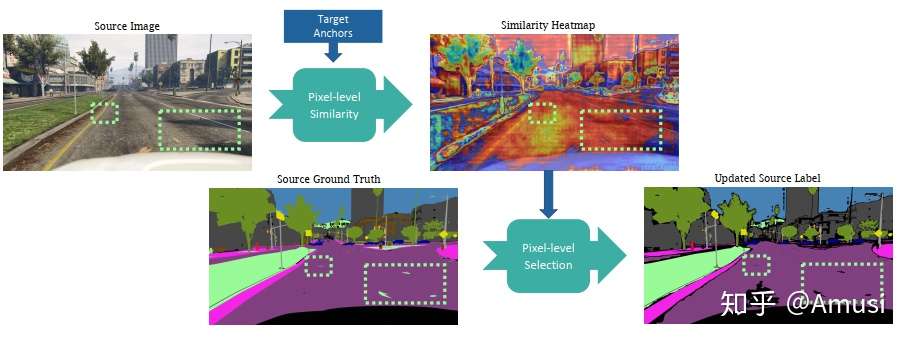

Content-Consistent Matching for Domain Adaptive Semantic Segmentation.

Seg[ECCV] [code]- "to acquire those synthetic images that share similar distribution with the real ones in the target domain, so that the domain gap can be naturally alleviated by employing the content-consistent synthetic images for training."

- "not all the source images could contribute to the improvement of adaptation performance, especially at certain training stages."

-

DA-NAS: Data Adapted Pruning for Efficient Neural Architecture Search. [ECCV]

- "Our method is based on an interesting observation that the learning speed for blocks in deep neural networks is related to the difficulty of recognizing distinct categories. We carefully design a progressive data adapted pruning strategy for efficient architecture search. It will quickly trim low performed blocks on a subset of target dataset (e.g., easy classes), and then gradually find the best blocks on the whole target dataset."

-

Label-similarity Curriculum Learning. [ECCV] [code]

- "The idea is to use a probability distribution over classes as target label, where the class probabilities reflect the similarity to the true class. Gradually, this label representation is shifted towards the standard one-hot-encoding."
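One way to realise the shifting target distribution is sketched below, assuming a precomputed class-similarity matrix whose diagonal entries are the largest in each row. The temperature-softmax mapping and the exponential `temperature_schedule` are illustrative assumptions, not the paper's exact construction: a high temperature spreads probability over similar classes, and as the temperature shrinks the target approaches the one-hot encoding.

```python
import numpy as np


def similarity_targets(similarity: np.ndarray, labels: np.ndarray,
                       temperature: float) -> np.ndarray:
    """Soft targets whose class probabilities follow similarity to the true class.

    similarity: (C, C) hypothetical class-similarity matrix, largest on the diagonal.
    labels:     (N,) integer ground-truth classes.
    Returns an (N, C) array whose rows sum to 1.
    """
    logits = similarity[labels] / temperature
    logits -= logits.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)


def temperature_schedule(epoch: int, start: float = 1.0, decay: float = 0.9) -> float:
    """Hypothetical exponential decay: smooth targets early, near one-hot later."""
    return start * decay ** epoch
```

In a training loop one would compute `similarity_targets(sim, y, temperature_schedule(epoch))` and minimise the cross-entropy between the model's predicted distribution and these soft targets.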

- Multi-Task Curriculum Framework for Open-Set Semi-Supervised Learning. [ECCV] [code]

- Semi-Supervised Semantic Segmentation via Dynamic Self-Training and Class-Balanced Curriculum. [arXiv] [code]

- Evolutionary Population Curriculum for Scaling Multi-Agent Reinforcement Learning. [ICLR] [code]
  - "Evolutionary Population Curriculum (EPC), a curriculum learning paradigm that scales up MultiAgent Reinforcement Learning (MARL) by progressively increasing the population of training agents in a stage-wise manner."

- Curriculum Loss: Robust Learning and Generalization Against Label Corruption. [ICLR]

- Automatic Curriculum Learning through Value Disagreement. [NeurIPS]
  - "When biological agents learn, there is often an organized and meaningful order to which learning happens."
  - "Our key insight is that if we can sample goals at the frontier of the set of goals that an agent is able to reach, it will provide a significantly stronger learning signal compared to randomly sampled goals"
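The frontier idea can be sketched as sampling goals in proportion to how much an ensemble of value estimates disagrees about them, which is one plausible reading of the value-disagreement approach; the array layout, the uniform fallback, and the function names are assumptions. Goals that every critic agrees on (trivially reachable or clearly out of reach) get low probability.

```python
import numpy as np


def disagreement_goal_probs(ensemble_values: np.ndarray) -> np.ndarray:
    """Goal-sampling distribution proportional to ensemble disagreement.

    ensemble_values: (num_critics, num_goals) value estimates of each candidate goal
                     from an ensemble of critics.
    """
    std = ensemble_values.std(axis=0)
    if std.sum() == 0:  # no disagreement at all: fall back to uniform sampling
        return np.full(std.shape, 1.0 / std.size)
    return std / std.sum()


def sample_goals(ensemble_values: np.ndarray, num_samples: int,
                 rng: np.random.Generator) -> np.ndarray:
    """Draw goal indices with probability proportional to value disagreement."""
    probs = disagreement_goal_probs(ensemble_values)
    return rng.choice(len(probs), size=num_samples, p=probs)
```

Using the standard deviation keeps the distribution non-negative without extra tuning; a temperature on the disagreement scores would be a natural knob if sampling turned out too greedy.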

- Curriculum Learning by Dynamic Instance Hardness. [NeurIPS]

- Self-paced Contrastive Learning with Hybrid Memory for Domain Adaptive Object Re-ID. [NeurIPS] [code] [Zhihu]

- Self-Paced Deep Reinforcement Learning. [NeurIPS]

- SuperLoss: A Generic Loss for Robust Curriculum Learning. [NeurIPS] [code]

- Curriculum Learning for Reinforcement Learning Domains: A Framework and Survey. [JMLR]

- Curriculum Labeling: Revisiting Pseudo-Labeling for Semi-Supervised Learning. [AAAI] [code]

- Robust Curriculum Learning: from clean label detection to noisy label self-correction. [ICLR] [OpenReview]
  - "Robust curriculum learning (RoCL) improves noisy label learning by periodical transitions from supervised learning of clean labeled data to self-supervision of wrongly-labeled data, where the data are selected according to training dynamics."

- Robust Early-Learning: Hindering The Memorization of Noisy Labels. [ICLR] [OpenReview]
  - "Robust early-learning: to reduce the side effect of noisy labels before early stopping and thus enhance the memorization of clean labels. Specifically, in each iteration, we divide all parameters into the critical and non-critical ones, and then perform different update rules for different types of parameters."

- When Do Curricula Work? [ICLR (oral)]
  - "We find that for standard benchmark datasets, curricula have only marginal benefits, and that randomly ordered samples perform as well or better than curricula and anti-curricula, suggesting that any benefit is entirely due to the dynamic training set size. ... Our experiments demonstrate that curriculum, but not anti-curriculum or random ordering can indeed improve the performance either with limited training time budget or in the existence of noisy data."
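The quote attributes most of the benefit to the dynamic training-set size. A common way to control that size is a pacing function that returns how many of the ranked examples are available at a given step; the two families below (linear growth and exponential steps) and all default parameters are illustrative assumptions.

```python
import math


def linear_pacing(step: int, total_steps: int, n: int, start_frac: float = 0.1) -> int:
    """Training-set size grows linearly from start_frac * n to n over total_steps."""
    frac = start_frac + (1.0 - start_frac) * min(1.0, step / total_steps)
    return min(n, max(1, math.ceil(frac * n)))


def exp_step_pacing(step: int, n: int, start_frac: float = 0.1,
                    step_length: int = 100, inc: float = 2.0) -> int:
    """Training-set size multiplies by `inc` every `step_length` steps, capped at n."""
    frac = start_frac * inc ** (step // step_length)
    return min(n, max(1, math.ceil(frac * n)))
```

To compare the orderings discussed in the quote, one would train on `order[:size]` where `order` is the easy-to-hard ranking (curriculum), the same ranking reversed (anti-curriculum), or a random permutation (random ordering), with `size` taken from the same pacing function in all three cases.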

-

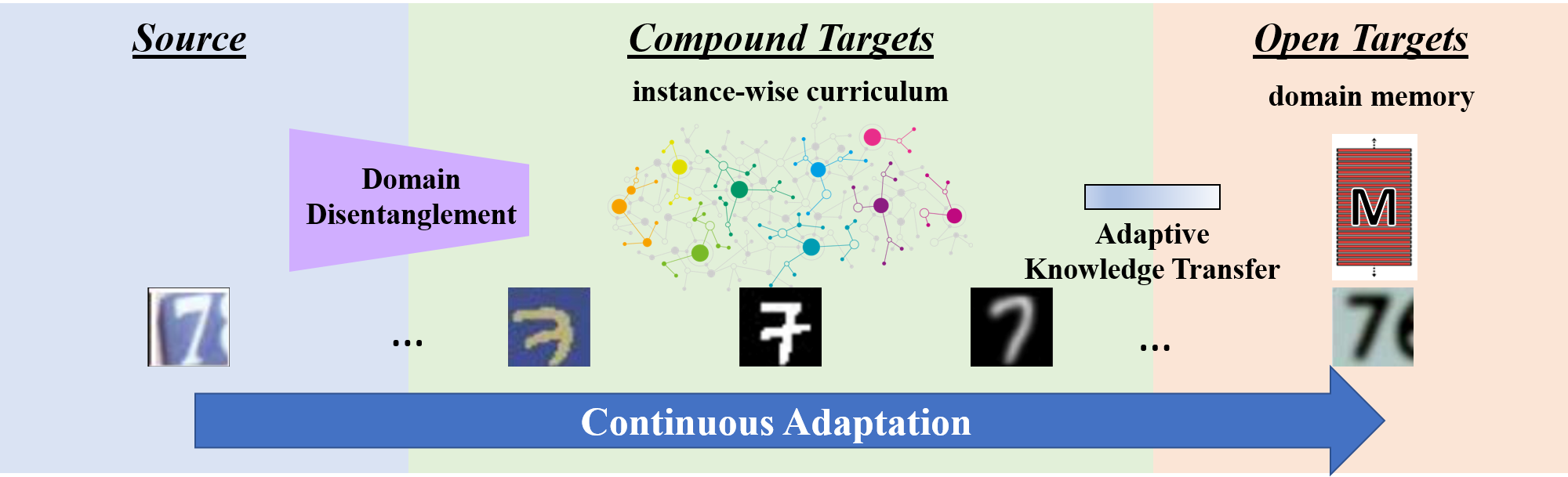

Curriculum Graph Co-Teaching for Multi-Target Domain Adaptation.

TL[CVPR] [code]

-

Unsupervised Curriculum Domain Adaptation for No-Reference Video Quality Assessment.

Cls[ICCV] [code] -

Adaptive Curriculum Learning. [ICCV]

-

Multi-Level Curriculum for Training A Distortion-Aware Barrel Distortion Rectification Model. [ICCV]

-

TeachMyAgent: a Benchmark for Automatic Curriculum Learning in Deep RL. [ICML] [code]

-

Self-Paced Context Evaluation for Contextual Reinforcement Learning.

RL[ICCV]- "To improve sample efficiency for learning on such instances of a problem domain, we present Self-Paced Context Evaluation (SPaCE). Based on self-paced learning, \spc automatically generates \task curricula online with little computational overhead. To this end, SPaCE leverages information contained in state values during training to accelerate and improve training performance as well as generalization capabilities to new instances from the same problem domain."

- Curriculum Learning by Optimizing Learning Dynamics. [AISTATS] [code]

- FlexMatch: Boosting Semi-Supervised Learning with Curriculum Pseudo Labeling. `Cls` [NeurIPS] [code]

- Learning with Noisy Correspondence for Cross-modal Matching. [NeurIPS] [code]

- Self-Paced Contrastive Learning for Semi-Supervised Medical Image Segmentation with Meta-labels. [NeurIPS]
  - "A self-paced learning strategy exploiting the weak annotations is proposed to further help the learning process and discriminate useful labels from noise."
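For reference, the classic hard-weighting self-paced learning scheme (not necessarily the variant used in this paper) selects samples by comparing each loss with a pace parameter `lam`; high-loss samples, which often include noisy labels, are excluded until the pace grows. A minimal NumPy sketch, where `growth` is an assumed hyperparameter:

```python
from typing import Tuple

import numpy as np


def self_paced_weights(losses: np.ndarray, lam: float) -> np.ndarray:
    """Hard self-paced weights.

    Minimising sum_i v_i * losses[i] - lam * sum_i v_i over v_i in {0, 1}
    gives v_i = 1 exactly when losses[i] < lam, so only "easy" samples are used.
    """
    return (losses < lam).astype(float)


def self_paced_round(losses: np.ndarray, lam: float,
                     growth: float = 1.1) -> Tuple[np.ndarray, float]:
    """One outer iteration: select the current easy set, then enlarge the pace.

    In a full loop the model is retrained on the weighted loss between rounds,
    so `losses` would be recomputed before the next call.
    """
    weights = self_paced_weights(losses, lam)
    return weights, lam * growth
```

Samples whose loss stays high even after the pace has grown are natural candidates for noisy labels, which is the intuition behind using self-paced selection to separate useful labels from noise.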

- Curriculum Learning for Vision-and-Language Navigation. [NeurIPS]
  - "We propose a novel curriculum-based training paradigm for VLN tasks that can balance human prior knowledge and agent learning progress about training samples."

- Characterizing and overcoming the greedy nature of learning in multi-modal deep neural networks. [ICML] [code]
  - "We hypothesize that due to the greedy nature of learning in multi-modal deep neural networks, these models tend to rely on just one modality while under-fitting the other modalities. ... We propose an algorithm to balance the conditional learning speeds between modalities during training and demonstrate that it indeed addresses the issue of greedy learning."

- Pseudo-Labeled Auto-Curriculum Learning for Semi-Supervised Keypoint Localization. `Seg` [ICLR] [OpenReview]
  - "We propose to automatically select reliable pseudo-labeled samples with a series of dynamic thresholds, which constitutes a learning curriculum."
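A simplified stand-in for the dynamic thresholds: instead of learning the thresholds as the paper does, the sketch below keeps a fixed, growing fraction of the most confident pseudo-labels in each self-training round, which corresponds to lowering a percentile threshold over time. `KEEP_SCHEDULE` and `select_pseudo_labels` are hypothetical names.

```python
import numpy as np


def select_pseudo_labels(confidence: np.ndarray, keep_fraction: float) -> np.ndarray:
    """Indices (in ascending order) of the most confident pseudo-labels.

    Keeps round(keep_fraction * n) samples, which implicitly places the
    confidence threshold at the matching percentile of the current round.
    """
    if not 0.0 <= keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in [0, 1]")
    k = int(round(keep_fraction * len(confidence)))
    most_confident_first = np.argsort(-confidence, kind="stable")
    return np.sort(most_confident_first[:k])


# Hypothetical curriculum: each self-training round admits a larger share of pseudo-labels.
KEEP_SCHEDULE = (0.2, 0.4, 0.6, 0.8, 1.0)


if __name__ == "__main__":
    conf = np.array([0.9, 0.2, 0.6, 0.4])
    for fraction in (0.5, 1.0):
        print(fraction, select_pseudo_labels(conf, fraction))
    # 0.5 [0 2]
    # 1.0 [0 1 2 3]
```

In practice the confidences would be recomputed by the retrained model at the start of every round before applying the next entry of the schedule.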

- C-Planning: An Automatic Curriculum for Learning Goal-Reaching Tasks. `RL` [ICLR] [OpenReview]

- Curriculum learning as a tool to uncover learning principles in the brain. [ICLR] [OpenReview]

- It Takes Four to Tango: Multiagent Self Play for Automatic Curriculum Generation. [ICLR] [OpenReview]

- Boosted Curriculum Reinforcement Learning. `RL` [ICLR] [OpenReview]

- ST++: Make Self-Training Work Better for Semi-Supervised Semantic Segmentation. [CVPR] [code]
  - "propose a selective re-training scheme via prioritizing reliable unlabeled samples to safely exploit the whole unlabeled set in an easy-to-hard curriculum learning manner."

- Robust Cross-Modal Representation Learning with Progressive Self-Distillation. [CVPR]
  - "The learning objective of vision-language approach of CLIP does not effectively account for the noisy many-to-many correspondences found in web-harvested image captioning datasets. To address this challenge, we introduce a novel training framework based on cross-modal contrastive learning that uses progressive self-distillation and soft image-text alignments to more efficiently learn robust representations from noisy data."

- EAT-C: Environment-Adversarial sub-Task Curriculum for Efficient Reinforcement Learning. [ICML]

- Curriculum Reinforcement Learning via Constrained Optimal Transport. [ICML] [code]

- Evolving Curricula with Regret-Based Environment Design. [ICML] [project]

- Robust Deep Reinforcement Learning through Bootstrapped Opportunistic Curriculum. [ICML] [code]

- On the Statistical Benefits of Curriculum Learning. [ICML]

- CLOSE: Curriculum Learning On the Sharing Extent Towards Better One-shot NAS. [ECCV] [code]
  - "We propose to apply Curriculum Learning On Sharing Extent (CLOSE) to train the supernet both efficiently and effectively. Specifically, we train the supernet with a large sharing extent (an easier curriculum) at the beginning and gradually decrease the sharing extent of the supernet (a harder curriculum)."

- Curriculum Learning for Data-Efficient Vision-Language Alignment. [arXiv]