Ruia

Overview

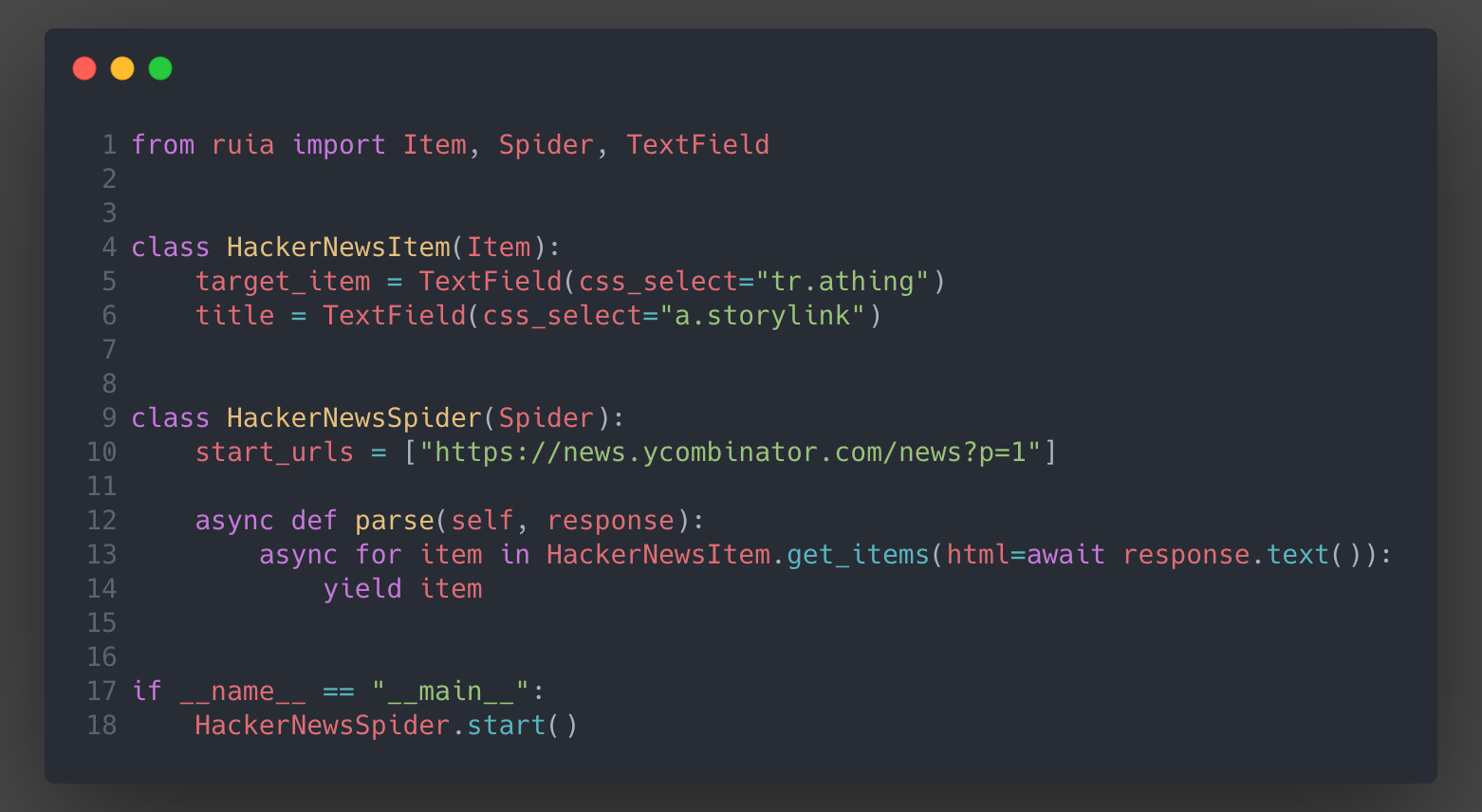

Ruia is an async web scraping micro-framework, written with asyncio and aiohttp, that aims to make crawling URLs as convenient as possible.

Write less, run faster:

- Documentation: Chinese documentation | English documentation

- Organization: python-ruia

- Plugin: awesome-ruia (any contributions you make are greatly appreciated!)

Features

- Easy: Declarative programming

- Fast: Powered by asyncio

- Extensible: Middlewares and plugins

- Powerful: JavaScript support
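To illustrate the "Fast: Powered by asyncio" point, here is a minimal sketch of concurrent fetching using only the standard library. It is not Ruia's API: `fake_fetch`, `crawl`, and the `concurrency` parameter are hypothetical names chosen for this example, and the network call is replaced by a short sleep so the snippet runs offline.

```python
import asyncio
import time
from typing import List


async def fake_fetch(url: str, delay: float = 0.1) -> str:
    """Hypothetical stand-in for an HTTP request; sleeps instead of using the network."""
    await asyncio.sleep(delay)
    return f"<html>{url}</html>"


async def crawl(urls: List[str], concurrency: int = 3) -> List[str]:
    """Fetch all URLs concurrently, with at most `concurrency` requests in flight."""
    semaphore = asyncio.Semaphore(concurrency)

    async def bounded_fetch(url: str) -> str:
        # The semaphore caps how many fetches run at the same time.
        async with semaphore:
            return await fake_fetch(url)

    # gather() preserves the order of the input URLs in its result list.
    return await asyncio.gather(*(bounded_fetch(u) for u in urls))


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(6)]
    start = time.perf_counter()
    pages = asyncio.run(crawl(urls))
    elapsed = time.perf_counter() - start
    print(f"fetched {len(pages)} pages in {elapsed:.2f}s")
```

Because the simulated requests overlap while waiting, the total time is governed by the number of batches rather than the number of URLs, which is the general benefit an asyncio-based crawler relies on.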

Installation

# For Linux & Mac

pip install -U ruia[uvloop]

# For Windows

pip install -U ruia

# For the latest features (development version from GitHub)

pip install git+https://github.com/howie6879/ruia
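After installing, one way to confirm which packages are available is a small standard-library check. This is only a sketch: `is_installed` is a hypothetical helper, and it assumes the import names are `ruia` and `uvloop`.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if the top-level module can be found in this environment."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # uvloop is only expected when the optional extra was installed.
    for name in ("ruia", "uvloop"):
        status = "installed" if is_installed(name) else "not installed"
        print(f"{name}: {status}")
```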

Tutorials

- Overview

- Installation

- Define Data Items

- Spider Control

- Request & Response

- Customize Middleware

- Write a Plugin

TODO

- Cache responses while debugging, to reduce repeated requests and avoid request limits: ruia-cache

- Provide an easy way to debug scripts: ruia-shell

- Distributed crawling/scraping

Contribution

Ruia is still under development; feel free to open issues and pull requests:

- Report or fix bugs

- Request or publish plugins

- Write or fix documentation

- Add test cases

Notice: We use black to format the code.