TTS: Text-to-Speech for all.

TTS is a library for advanced Text-to-Speech generation. It is built on the latest research and designed to achieve the best trade-off among ease of training, speed and quality. TTS comes with pretrained models and tools for measuring dataset quality, and it is already used in 20+ languages for products and research projects.

💬 Where to ask questions

Please use our dedicated channels for questions and discussion. Help is much more valuable if it's shared publicly, so that more people can benefit from it.

| Type | Platforms |
|---|---|
| | GitHub Issue Tracker |
| | TTS/Wiki |
| | GitHub Issue Tracker |
| | Discourse Forum |
| | Discourse Forum and Matrix Channel |

🔗 Links and Resources

| Type | Links |
|---|---|
| | TTS/README.md |
| 👩🏾‍🏫 Tutorials and Examples | TTS/Wiki |
| | TTS/Wiki |
| | Repository by @synesthesiam |
| | TTS/server |
| | TTS/README.md |
| | TTS/README.md |

🥇 TTS Performance

"Mozilla*" and "Judy*" are our models. Details...

Features

- High performance Deep Learning models for Text2Speech tasks.

- Text2Spec models (Tacotron, Tacotron2, Glow-TTS, SpeedySpeech).

- Speaker Encoder to compute speaker embeddings efficiently.

- Vocoder models (MelGAN, Multiband-MelGAN, GAN-TTS, ParallelWaveGAN, WaveGrad, WaveRNN)

- Fast and efficient model training.

- Detailed training logs on console and Tensorboard.

- Support for multi-speaker TTS.

- Efficient multi-GPU training.

- Ability to convert PyTorch models to Tensorflow 2.0 and TFLite for inference.

- Released models in PyTorch, Tensorflow and TFLite.

- Tools to curate Text2Speech datasets under dataset_analysis.

- Demo server for model testing.

- Notebooks for extensive model benchmarking.

- Modular (but not too much) code base enabling easy testing for new ideas.

Implemented Models

Text-to-Spectrogram

Attention Methods

- Guided Attention: paper

- Forward Backward Decoding: paper

- Graves Attention: paper

- Double Decoder Consistency: blog

Speaker Encoder

Vocoders

- MelGAN: paper

- MultiBandMelGAN: paper

- ParallelWaveGAN: paper

- GAN-TTS discriminators: paper

- WaveRNN: origin

- WaveGrad: paper

You can also help us implement more models. Some TTS-related work can be found here.

Install TTS

TTS supports Python >= 3.6, <3.9.
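As a quick sanity check before installing, the supported range can be tested from Python itself. This is a minimal sketch, not part of TTS: the function name is_supported_python is hypothetical, and the bounds are simply copied from the line above.

```python
import sys

# Bounds copied from the supported range stated above: >= 3.6 and < 3.9.
MIN_VERSION = (3, 6)
MAX_VERSION_EXCLUSIVE = (3, 9)


def is_supported_python(version=None):
    """Return True if the (major, minor) version lies in the supported range.

    Hypothetical helper for illustration; TTS does not ship this function.
    """
    if version is None:
        version = sys.version_info
    major_minor = tuple(version[:2])
    return MIN_VERSION <= major_minor < MAX_VERSION_EXCLUSIVE
```

Calling is_supported_python() with no argument checks the interpreter that is currently running.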

If you are only interested in synthesizing speech with the released TTS models, installing from PyPI is the easiest option.

pip install TTS

If you plan to code or train models, clone TTS and install it locally.

git clone https://github.com/mozilla/TTS

cd TTS

pip install -e .

Directory Structure

|- notebooks/ (Jupyter Notebooks for model evaluation, parameter selection and data analysis.)
|- utils/ (common utilities.)
|- TTS
    |- bin/ (folder for all the executables.)
        |- train*.py (train your target model.)
        |- distribute.py (train your TTS model using multiple GPUs.)
        |- compute_statistics.py (compute dataset statistics for normalization.)
        |- convert*.py (convert target torch model to TF.)
    |- tts/ (text to speech models)
        |- layers/ (model layer definitions)
        |- models/ (model definitions)
        |- tf/ (Tensorflow 2 utilities and model implementations)
        |- utils/ (model specific utilities.)
    |- speaker_encoder/ (Speaker Encoder models.)
        |- (same)
    |- vocoder/ (Vocoder models.)
        |- (same)

Sample Model Output

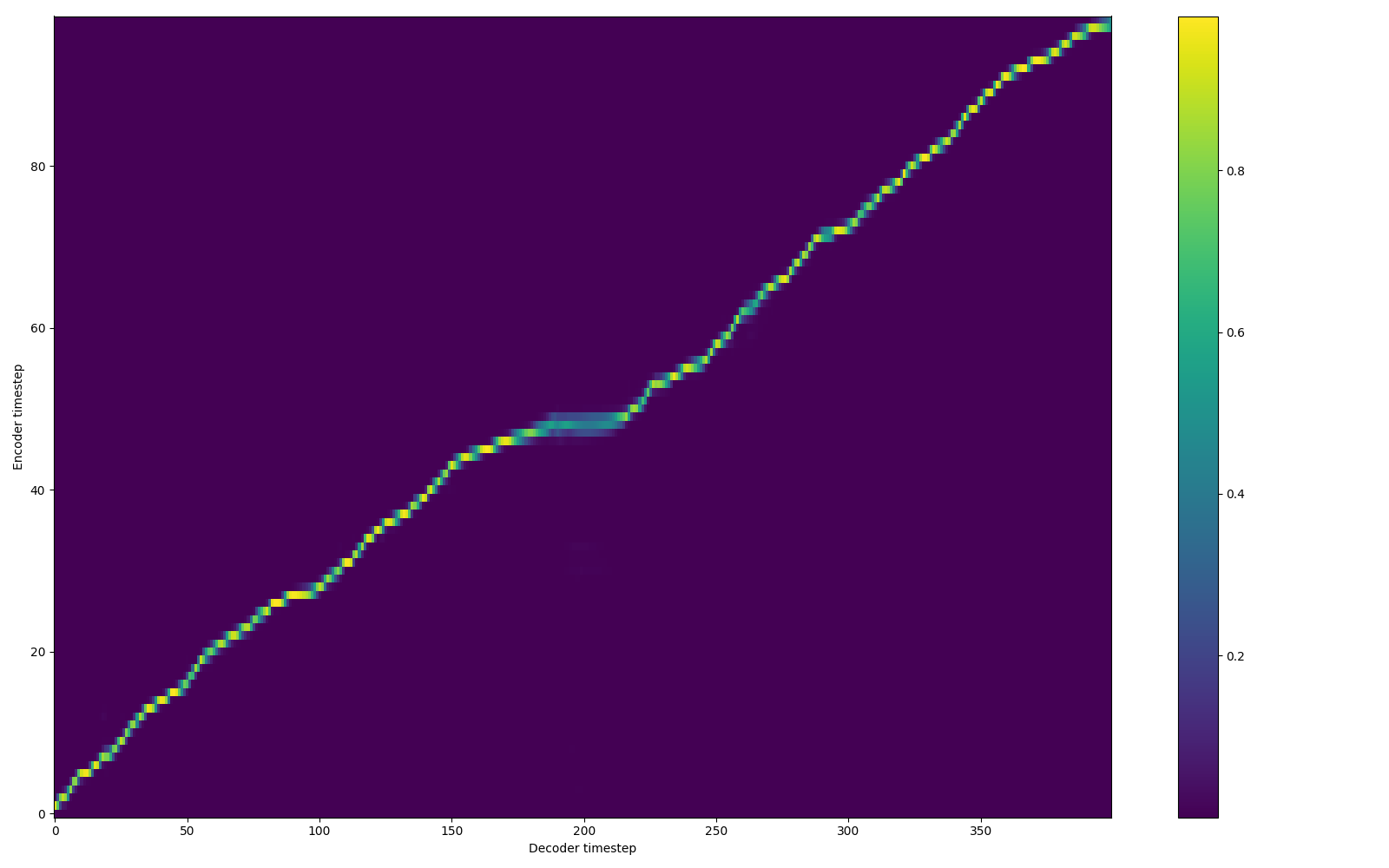

The sample shows the Tacotron model state after 16K iterations with a batch size of 32 on the LJSpeech dataset, for the following input sentence:

"Recent research at Harvard has shown meditating for as little as 8 weeks can actually increase the grey matter in the parts of the brain responsible for emotional regulation and learning."

Audio examples: soundcloud

Datasets and Data-Loading

TTS provides a generic dataloader that is easy to use with your custom dataset. You only need to write a simple function that formats the dataset. Check datasets/preprocess.py for examples. After that, set the dataset fields in config.json.
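To give a rough idea of what such a formatting function might look like, here is a minimal sketch. Everything in it is an assumption for illustration: the function name my_dataset_formatter, the pipe-delimited metadata layout, the wavs/ subfolder, and the [text, wav_path, speaker_name] item layout. Compare it against the real examples in datasets/preprocess.py before relying on it.

```python
import os


def my_dataset_formatter(root_path, meta_file, speaker_name="my_speaker"):
    """Hypothetical formatter for a pipe-delimited metadata file.

    Assumed line layout: "<wav id>|<transcript>" with an optional third
    column holding a normalized transcript. Returns a list of
    [text, wav_path, speaker_name] items (an assumed item layout).
    """
    items = []
    meta_path = os.path.join(root_path, meta_file)
    with open(meta_path, "r", encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line:
                continue  # skip blank lines
            cols = line.split("|")
            # Assumption: audio lives under <root_path>/wavs/<id>.wav
            wav_path = os.path.join(root_path, "wavs", cols[0] + ".wav")
            # Use the last column so a normalized transcript wins if present.
            text = cols[-1]
            items.append([text, wav_path, speaker_name])
    return items
```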

Some of the public datasets to which we have successfully applied TTS:

Example: Synthesizing Speech on Terminal Using the Released Models.

After installation, TTS provides a CLI for synthesizing speech with pre-trained models. You can use either your own model or the released models under the TTS project.

Listing released TTS models.

tts --list_models

Run a TTS model and a vocoder model from the released model list. (Simply copy and paste the full model names from the list as arguments for the command below.)

tts --text "Text for TTS" \
    --model_name "///" \
    --vocoder_name "///" \
    --out_path folder/to/save/output/

Run your own TTS model (Using Griffin-Lim Vocoder)

tts --text "Text for TTS" \
    --model_path path/to/model.pth.tar \
    --config_path path/to/config.json \
    --out_path output/path/speech.wav

Run your own TTS and Vocoder models

tts --text "Text for TTS" \
    --model_path path/to/model.pth.tar \
    --config_path path/to/config.json \
    --out_path output/path/speech.wav \
    --vocoder_path path/to/vocoder.pth.tar \
    --vocoder_config_path path/to/vocoder_config.json

Note: You can use ./TTS/bin/synthesize.py if you prefer running tts from the TTS project folder.
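When calling the CLI from a script, it can help to assemble the argument list in one place so that the model and config paths cannot be mixed up. The sketch below is an illustration only: build_tts_command is a hypothetical helper, and it uses just the flags shown in the commands above. It does not run anything by itself.

```python
def build_tts_command(text, model_path, config_path, out_path,
                      vocoder_path=None, vocoder_config_path=None):
    """Build the argument list for the `tts` CLI (hypothetical helper).

    Only flags that appear in the examples above are used. The vocoder
    checkpoint and its config must be given together or not at all.
    """
    if (vocoder_path is None) != (vocoder_config_path is None):
        raise ValueError(
            "vocoder_path and vocoder_config_path must be given together")
    cmd = [
        "tts",
        "--text", text,
        "--model_path", model_path,
        "--config_path", config_path,
        "--out_path", out_path,
    ]
    if vocoder_path is not None:
        cmd += [
            "--vocoder_path", vocoder_path,
            "--vocoder_config_path", vocoder_config_path,
        ]
    return cmd
```

Once tts is installed, the returned list can be passed to subprocess.run(cmd, check=True).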

Example: Training and Fine-tuning LJ-Speech Dataset

Here you can find a Colab notebook with a hands-on example of training on LJSpeech. Alternatively, you can follow the steps below manually.

To start with, split metadata.csv into a training subset, metadata_train.csv, and a validation subset, metadata_val.csv. Note that for text-to-speech, validation performance can be misleading: the loss value does not directly measure voice quality as perceived by the human ear, and it does not measure the performance of the attention module either. Therefore, running the model on new sentences and listening to the results is the best way to evaluate it.

shuf metadata.csv > metadata_shuf.csv

head -n 12000 metadata_shuf.csv > metadata_train.csv

tail -n 1100 metadata_shuf.csv > metadata_val.csv
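On systems without shuf, the same split can be sketched in Python. This is a minimal illustration, with split_metadata as a hypothetical name and the default sizes copied from the commands above. One deliberate difference: validation lines are taken right after the training lines, so the two subsets never overlap, even when the file has fewer lines than the two sizes combined.

```python
import random


def split_metadata(lines, n_train=12000, n_val=1100, seed=0):
    """Shuffle metadata lines and split them into train and validation sets.

    Hypothetical helper. A fixed seed keeps the split reproducible.
    """
    shuffled = list(lines)
    random.Random(seed).shuffle(shuffled)
    train = shuffled[:n_train]
    # Take validation lines after the training lines to avoid overlap.
    val = shuffled[n_train:n_train + n_val]
    return train, val
```

To use it, read metadata.csv into a list of lines, call split_metadata, and write the two returned lists to metadata_train.csv and metadata_val.csv.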

To train a new model, define your own config.json to specify the model details, training configuration and more (check the examples). Then call the corresponding train script.

For instance, to train a Tacotron or Tacotron2 model on the LJSpeech dataset, run the following command.

python TTS/bin/train_tacotron.py --config_path TTS/tts/configs/config.json

To fine-tune a model, use --restore_path.

python TTS/bin/train_tacotron.py --config_path TTS/tts/configs/config.json --restore_path /path/to/your/model.pth.tar

To continue an old training run, use --continue_path.

python TTS/bin/train_tacotron.py --continue_path /path/to/your/run_folder/

For multi-GPU training, call distribute.py. It runs any provided train script in a multi-GPU setting.

CUDA_VISIBLE_DEVICES="0,1,4" python TTS/bin/distribute.py --script train_tacotron.py --config_path TTS/tts/configs/config.json
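The same launch can be prepared from Python by setting CUDA_VISIBLE_DEVICES in a copy of the environment. This is a sketch under assumptions: distribute_invocation is a hypothetical helper, and the script and config paths are just the ones from the command above.

```python
import os


def distribute_invocation(gpu_ids, script="train_tacotron.py",
                          config_path="TTS/tts/configs/config.json"):
    """Return (cmd, env) for launching distribute.py on the given GPUs.

    Hypothetical helper. It only prepares the command and environment
    and leaves the actual launch to the caller.
    """
    env = dict(os.environ)  # copy, so the current process is unaffected
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    cmd = [
        "python", "TTS/bin/distribute.py",
        "--script", script,
        "--config_path", config_path,
    ]
    return cmd, env
```

For example, cmd, env = distribute_invocation([0, 1, 4]) followed by subprocess.run(cmd, env=env, check=True) mirrors the shell command above.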

Each run creates a new output folder containing the config.json used, model checkpoints and Tensorboard logs.

If a run fails or is interrupted before any checkpoint has been saved, the whole output folder is removed.
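The cleanup behaviour can be pictured with the following sketch. It is not the code TTS uses: the function name is hypothetical, and the *.pth.tar pattern is an assumption based on the checkpoint file names that appear elsewhere in this README.

```python
import glob
import os
import shutil


def remove_run_folder_if_no_checkpoint(run_folder, pattern="*.pth.tar"):
    """Delete run_folder when it holds no checkpoint file.

    Hypothetical illustration. Returns True if the folder was removed
    and False if a checkpoint was found and the folder was kept.
    """
    checkpoints = glob.glob(os.path.join(run_folder, pattern))
    if checkpoints:
        return False
    shutil.rmtree(run_folder, ignore_errors=True)
    return True
```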

You can also monitor training with Tensorboard by pointing its --logdir argument to the experiment folder.

Contribution Guidelines

This repository is governed by Mozilla's code of conduct and etiquette guidelines. For more details, please read the Mozilla Community Participation Guidelines.

- Create a new branch.

- Implement your changes.

- (if applicable) Add Google Style docstrings.

- (if applicable) Implement a test case under the tests folder.

- (Optional but preferred) Run tests.

./run_tests.sh

- Run the linter.

pip install pylint cardboardlint

cardboardlinter --refspec master

- Send a PR to the dev branch and explain what the change is about.

- Let us discuss until we make it perfect :).

- We merge it into the dev branch once things look good.

Feel free to ping us through our communication channels at any step where you need help.

Collaborative Experimentation Guide

If you would like to use TTS to try a new idea and share your experiments with the community, we urge you to follow the guideline below for better collaboration. (If you have an idea for better collaboration, let us know.)

- Create a new branch.

- Open an issue pointing to your branch.

- Explain your idea and experiment.

- Share your results regularly. (Tensorboard log files, audio results, visuals etc.)

Major TODOs

- Implement the model.

- Generate human-like speech on LJSpeech dataset.

- Generate human-like speech on a different dataset (Nancy) (TWEB).

- Train TTS with r=1 successfully.

- Enable process based distributed training. Similar to (https://github.com/fastai/imagenet-fast/).

- Adapting Neural Vocoder. TTS works with WaveRNN and ParallelWaveGAN (https://github.com/erogol/WaveRNN and https://github.com/erogol/ParallelWaveGAN)

- Multi-speaker embedding.

- Model optimization (model export, model pruning etc.)

Acknowledgement

- https://github.com/keithito/tacotron (Dataset pre-processing)

- https://github.com/r9y9/tacotron_pytorch (Initial Tacotron architecture)

- https://github.com/kan-bayashi/ParallelWaveGAN (vocoder library)

- https://github.com/jaywalnut310/glow-tts (Original Glow-TTS implementation)

- https://github.com/fatchord/WaveRNN/ (Original WaveRNN implementation)