Hatchet

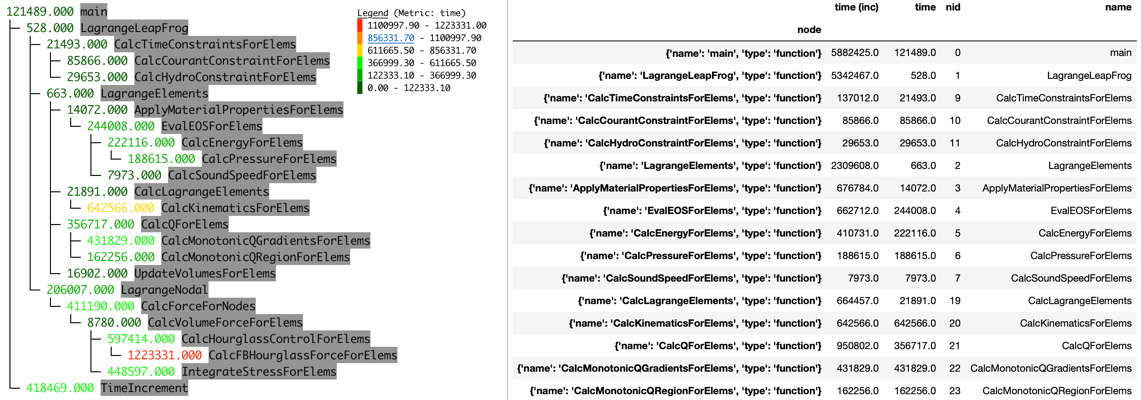

Hatchet is a Python-based library that allows Pandas dataframes to be indexed by structured tree and graph data. It is intended for analyzing performance data that has a hierarchy (for example, serial or parallel profiles that represent calling context trees, call graphs, nested regions’ timers, etc.). Hatchet implements various operations to analyze a single hierarchical data set or compare multiple data sets, and its API facilitates analyzing such data programmatically.
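As a rough illustration of the idea (not Hatchet's API), the sketch below keys a small table of metrics by the nodes of a call tree and derives inclusive times from exclusive ones. The `Node` class, the `walk` helper, the function names in the tree, and the timing values are all hypothetical. A plain dictionary stands in for the Pandas dataframe so that the example runs with the standard library alone.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # eq=False keeps identity-based hashing, so nodes can be dict keys
class Node:
    """Hypothetical call-tree node; not a Hatchet class."""
    name: str
    children: list = field(default_factory=list)


def walk(node):
    """Yield the node and all of its descendants in pre-order."""
    yield node
    for child in node.children:
        yield from walk(child)


# Build a tiny, made-up calling context tree: main -> read_input, solve
read_input = Node("read_input")
solve = Node("solve")
main = Node("main", [read_input, solve])

# Metric table indexed by tree node (stand-in for a dataframe indexed by nodes).
# Values are invented exclusive times in seconds.
exclusive = {main: 1.0, read_input: 2.5, solve: 6.0}


def inclusive_time(node):
    """Inclusive time = exclusive time of the node plus that of all descendants."""
    return sum(exclusive[n] for n in walk(node))


inclusive = {n: inclusive_time(n) for n in walk(main)}

for n in walk(main):
    print(f"{n.name}: exclusive={exclusive[n]}, inclusive={inclusive[n]}")
```

Because the table is keyed by the nodes themselves, any operation that follows the tree structure (here, a subtree sum) can look up metrics directly, which is the kind of analysis the description above refers to.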

To use Hatchet, install it with pip:

$ pip install llnl-hatchet

Or, if you want to develop with this repo directly, run the install script from the root directory. It builds the Cython modules and adds the cloned directory to your PYTHONPATH:

$ source install.sh

Documentation

See the Getting Started page for basic examples and usage. Full documentation is available in the User Guide.

Examples of performance analysis using Hatchet are available here.

Contributing

Hatchet is an open source project. We welcome contributions via pull requests, and questions, feature requests, or bug reports via issues.

Authors

Many thanks go to Hatchet's contributors.

Citing Hatchet

If you are referencing Hatchet in a publication, please cite the following paper:

- Abhinav Bhatele, Stephanie Brink, and Todd Gamblin. Hatchet: Pruning the Overgrowth in Parallel Profiles. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis (SC '19). ACM, New York, NY, USA. DOI

License

Hatchet is distributed under the terms of the MIT license.

All contributions must be made under the MIT license. Copyrights in the Hatchet project are retained by contributors. No copyright assignment is required to contribute to Hatchet.

See LICENSE and NOTICE for details.

SPDX-License-Identifier: MIT

LLNL-CODE-741008