AI4Animation: Deep Learning for Character Control

This project explores the opportunities of deep learning for character animation and control as part of my Ph.D. research at the University of Edinburgh in the School of Informatics, supervised by Taku Komura. Over the last couple of years, this project has become a comprehensive framework for data-driven character animation, including data processing, network training and runtime control, developed in Unity3D / TensorFlow / PyTorch. This repository demonstrates using neural networks for animating biped locomotion, quadruped locomotion, and character-scene interactions with objects and the environment, plus sports and fighting games. Further advances in this research will continue to be added to this project.

SIGGRAPH 2021

Neural Animation Layering for Synthesizing Martial Arts Movements

Sebastian Starke, Yiwei Zhao, Fabio Zinno, Taku Komura. ACM Trans. Graph. 40, 4, Article 92.

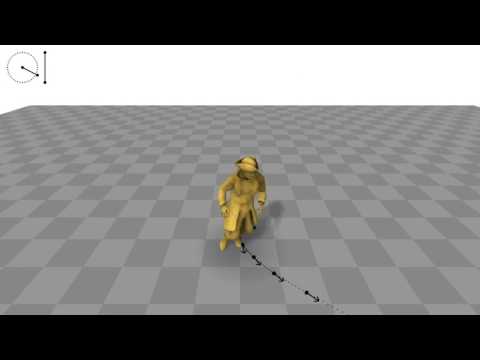

Interactively synthesizing novel combinations and variations of character movements from different motion skills is a key problem in computer animation. In this research, we propose a deep learning framework to produce a large variety of martial arts movements in a controllable manner from raw motion capture data. Our method imitates animation layering using neural networks with the aim of overcoming typical challenges when mixing, blending and editing movements from unaligned motion sources. The system can be used for offline and online motion generation alike, provides an intuitive interface to integrate with animator workflows, and is relevant for real-time applications such as computer games.

SIGGRAPH 2020

Local Motion Phases for Learning Multi-Contact Character Movements

Sebastian Starke, Yiwei Zhao, Taku Komura, Kazi Zaman. ACM Trans. Graph. 39, 4, Article 54.

Not sure how to align complex character movements? Tired of phase labeling? Unclear how to squeeze everything into a single phase variable? Don't worry, a solution exists!

Controlling characters to perform a large variety of dynamic, fast-paced and quickly changing movements is a key challenge in character animation. In this research, we present a deep learning framework to interactively synthesize such animations in high quality, from unstructured motion data and without any manual labeling. We introduce the concept of local motion phases, and show that our system can produce various motion skills, such as ball dribbling and professional maneuvers in basketball plays, shooting, catching, avoidance, multiple locomotion modes as well as different character and object interactions, all generated under a unified framework.

- Video - Paper - Code - Windows Demo - ReadMe -

SIGGRAPH Asia 2019

Neural State Machine for Character-Scene Interactions

Sebastian Starke+, He Zhang+, Taku Komura, Jun Saito. ACM Trans. Graph. 38, 6, Article 178.

(+Joint First Authors)

Animating characters can be an easy or difficult task - interacting with objects is one of the latter. In this research, we present the Neural State Machine, a data-driven deep learning framework for character-scene interactions. The difficulty in such animations is that they require complex planning of periodic as well as aperiodic movements to complete a given task. Creating them in production-ready quality is not straightforward and often very time-consuming. Instead, our system can synthesize different movements and scene interactions from motion capture data, and allows the user to seamlessly control the character in real-time from simple control commands. Since our model directly learns from the geometry, the motions can naturally adapt to variations in the scene. We show that our system can generate a large variety of movements, including locomotion, sitting on chairs, carrying boxes, opening doors and avoiding obstacles, all from a single model. The model is responsive, compact and scalable, and is the first of such frameworks to handle scene interaction tasks for data-driven character animation.

- Video - Paper - Code & Demo - Mocap Data - ReadMe -

SIGGRAPH 2018

Mode-Adaptive Neural Networks for Quadruped Motion Control

He Zhang+, Sebastian Starke+, Taku Komura, Jun Saito. ACM Trans. Graph. 37, 4, Article 145.

(+Joint First Authors)

Animating characters can be a pain, especially those four-legged monsters! This year, we will be presenting our recent research on quadruped animation and character control at SIGGRAPH 2018 in Vancouver. The system can produce natural animations from real motion data using a novel neural network architecture, called Mode-Adaptive Neural Networks. Instead of optimising a fixed group of weights, the system learns to dynamically blend a group of weights into a further neural network, based on the current state of the character. As a result, the system does not require labels for the phase or locomotion gaits, but can learn from unstructured motion capture data in an end-to-end fashion.

- Video - Paper - Code - Mocap Data - Windows Demo - Linux Demo - Mac Demo - ReadMe -
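The weight-blending idea described above can be illustrated with a minimal sketch in plain Python. It is not the code from this repository: the function names (`softmax`, `blend_experts`, `linear`, `mode_adaptive_layer`), the single linear gating layer, and the toy matrix sizes are all assumptions made for illustration. The sketch only shows the general pattern: a gating function maps the character state to blending coefficients, several weight matrices are blended with those coefficients, and the blended matrix is then applied to the input features.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, inputs):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def blend_experts(expert_weights, coefficients):
    """Blend same-shaped weight matrices: W = sum_i alpha_i * W_i."""
    rows = len(expert_weights[0])
    cols = len(expert_weights[0][0])
    return [
        [
            sum(a * w[r][c] for a, w in zip(coefficients, expert_weights))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def mode_adaptive_layer(gating_weights, expert_weights, state, features):
    """Hypothetical single layer: gate on the state, then apply blended weights.

    Assumption: the gating function is one linear map followed by a softmax.
    """
    coefficients = softmax(linear(gating_weights, state))
    blended = blend_experts(expert_weights, coefficients)
    return linear(blended, features), coefficients


# Toy example: two 2x2 "expert" weight matrices and a gating map of all zeros,
# so both experts receive the same blending coefficient.
experts = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[2.0, 0.0], [0.0, 2.0]],
]
gating = [[0.0, 0.0], [0.0, 0.0]]
output, alphas = mode_adaptive_layer(gating, experts, [1.0, -1.0], [2.0, 4.0])
print(alphas, output)
```

Because the coefficients depend on the character state at every frame, the effective weights change continuously over time, which is what lets such a design work without explicit phase or gait labels.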

SIGGRAPH 2017

Phase-Functioned Neural Networks for Character Control

Daniel Holden, Taku Komura, Jun Saito. ACM Trans. Graph. 36, 4, Article 42.

This work continues the recent work on PFNN (Phase-Functioned Neural Networks) for character control. A demo in Unity3D using the original weights for terrain-adaptive locomotion is contained in the Assets/Demo/SIGGRAPH_2017/Original folder. Another demo on flat ground using the Adam character is contained in the Assets/Demo/SIGGRAPH_2017/Adam folder. In order to run them, you need to download the neural network weights from the link provided in the Link.txt file, extract them into the /NN folder, and store the parameters via the custom inspector button.

- Video - Paper - Code (Unity) - Windows Demo - Linux Demo - Mac Demo -

Processing Pipeline

In progress. More information will be added soon.

Copyright Information

This project is only for research or education purposes, and not freely available for commercial use or redistribution. The motion capture data is available only under the terms of the Attribution-NonCommercial 4.0 International (CC BY-NC 4.0) license.