This repository represents Ultralytics open-source research into future object detection methods, and incorporates lessons learned and best practices evolved over thousands of hours of training and evolution on anonymized client datasets. All code and models are under active development, and are subject to modification or deletion without notice. Use at your own risk.

Figure Notes

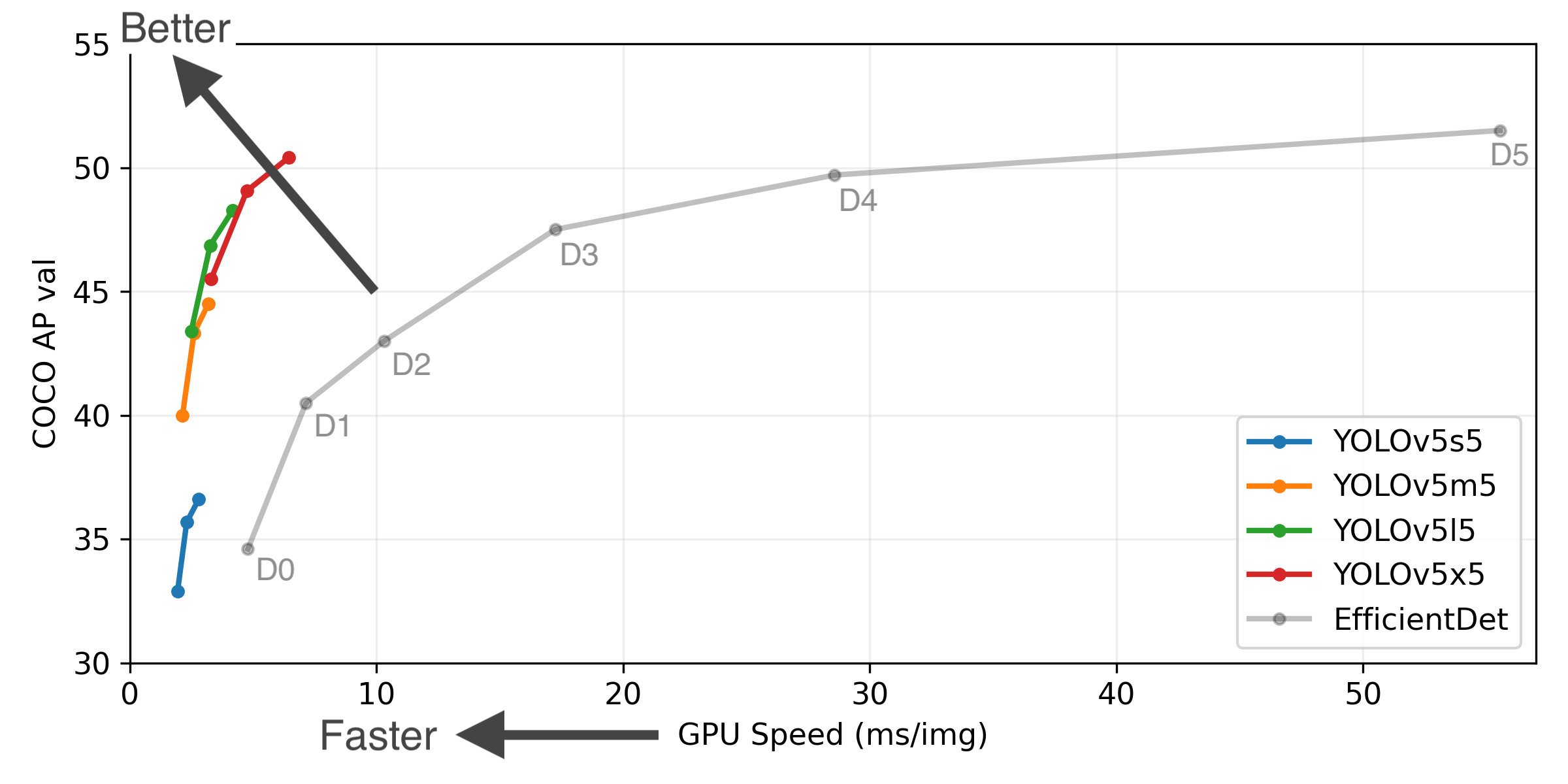

- GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS.

- EfficientDet data from google/automl at batch size 8.

- Reproduce by

python test.py --task study --data coco.yaml --iou 0.7 --weights yolov3.pt yolov3-spp.pt yolov3-tiny.pt yolov5l.pt

Branch Notice

The ultralytics/yolov3 repository is now divided into two branches:

- Master branch: Forward-compatible with all YOLOv5 models and methods (recommended ✅).

$ git clone https://github.com/ultralytics/yolov3 # master branch (default)

- Archive branch: Backwards-compatible with original darknet *.cfg models (no longer maintained ⚠️).

$ git clone https://github.com/ultralytics/yolov3 -b archive # archive branch

Pretrained Checkpoints

| Model | size (pixels) | mAPval 0.5:0.95 | mAPtest 0.5:0.95 | mAPval 0.5 | Speed V100 (ms) | params (M) | FLOPS 640 (B) |
|---|---|---|---|---|---|---|---|
| YOLOv3-tiny | 640 | 17.6 | 17.6 | 34.8 | 1.2 | 8.8 | 13.2 |
| YOLOv3 | 640 | 43.3 | 43.3 | 63.0 | 4.1 | 61.9 | 156.3 |
| YOLOv3-SPP | 640 | 44.3 | 44.3 | 64.6 | 4.1 | 63.0 | 157.1 |
| YOLOv5l | 640 | 48.2 | 48.2 | 66.9 | 3.7 | 47.0 | 115.4 |

Table Notes

- APtest denotes COCO test-dev2017 server results; all other AP results denote val2017 accuracy.

- AP values are for single-model single-scale unless otherwise noted. Reproduce mAP by

python test.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65

- SpeedGPU is averaged over 5000 COCO val2017 images using a GCP n1-standard-16 V100 instance, and includes FP16 inference, postprocessing and NMS. Reproduce speed by

python test.py --data coco.yaml --img 640 --conf 0.25 --iou 0.45

- All checkpoints are trained to 300 epochs with default settings and hyperparameters (no autoaugmentation).
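The two reproduce commands above differ only in their `--conf` and `--iou` values. The following is a minimal, class-agnostic sketch of confidence filtering followed by greedy NMS, written in pure Python to show how those two thresholds interact. It is an illustration under stated assumptions, not the repository's implementation (which operates on tensors and handles classes); the function names, boxes and scores here are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float],
        conf_thres: float, iou_thres: float) -> List[int]:
    """Return indices of kept boxes, highest score first."""
    # Drop low-confidence candidates, then visit the rest by descending score.
    order = sorted((i for i, s in enumerate(scores) if s > conf_thres),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if its overlap with every kept box is within the limit.
        if all(iou(boxes[i], boxes[j]) <= iou_thres for j in keep):
            keep.append(i)
    return keep


# Hypothetical detections: two overlapping boxes, one separate, one very weak.
boxes = [(0, 0, 10, 10), (2, 2, 12, 12), (20, 20, 30, 30), (40, 40, 50, 50)]
scores = [0.9, 0.8, 0.3, 0.05]

kept_eval = nms(boxes, scores, conf_thres=0.001, iou_thres=0.65)   # mAP settings
kept_deploy = nms(boxes, scores, conf_thres=0.25, iou_thres=0.45)  # speed settings
print(kept_eval, kept_deploy)
```

With the mAP settings, nearly every candidate survives, which is what a precision-recall curve needs; with the speed settings, the weak box and the overlapping duplicate are both dropped, leaving less work for postprocessing.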

Requirements

Python 3.8 or later with all requirements.txt dependencies installed, including torch>=1.7. To install run:

$ pip install -r requirements.txt
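As a quick sanity check after installing, the version requirements stated above (Python 3.8 or later, torch>=1.7) can be verified with a short script. This is a sketch, not part of the repository: `version_tuple` and `check_environment` are hypothetical helper names, and only the two versions named above are checked, not the rest of requirements.txt.

```python
import sys
from itertools import takewhile


def version_tuple(version: str, parts: int = 2) -> tuple:
    """Turn a string such as '1.7.1+cu110' into (1, 7)."""
    numbers = []
    for piece in version.split("+")[0].split(".")[:parts]:
        # Keep only the leading digits of each component.
        digits = "".join(takewhile(str.isdigit, piece))
        numbers.append(int(digits) if digits else 0)
    return tuple(numbers)


def check_environment(min_python=(3, 8), min_torch=(1, 7)) -> list:
    """Return a list of human-readable problems (empty if none were found)."""
    if sys.version_info[:2] < min_python:
        return ["Python %d.%d or later is required" % min_python]

    # importlib.metadata is in the standard library from Python 3.8 onward.
    from importlib import metadata

    try:
        torch_version = metadata.version("torch")
    except metadata.PackageNotFoundError:
        return ["torch is not installed"]

    if version_tuple(torch_version) < min_torch:
        return ["torch %s is older than %d.%d" % ((torch_version,) + min_torch)]
    return []


if __name__ == "__main__":
    for problem in check_environment():
        print(problem)
```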

Tutorials

- Train Custom Data 🚀 RECOMMENDED

- Tips for Best Training Results ☘️ RECOMMENDED

- Weights & Biases Logging 🌟 NEW

- Supervisely Ecosystem 🌟 NEW

- Multi-GPU Training

- PyTorch Hub ⭐ NEW

- TorchScript, ONNX, CoreML Export 🚀

- Test-Time Augmentation (TTA)

- Model Ensembling

- Model Pruning/Sparsity

- Hyperparameter Evolution

- Transfer Learning with Frozen Layers ⭐ NEW

- TensorRT Deployment

Environments

YOLOv3 may be run in any of the following up-to-date verified environments (with all dependencies including CUDA/CUDNN, Python and PyTorch preinstalled):

- Google Colab and Kaggle notebooks with free GPU

- Google Cloud Deep Learning VM. See GCP Quickstart Guide

- Amazon Deep Learning AMI. See AWS Quickstart Guide

- Docker Image. See Docker Quickstart Guide

Inference

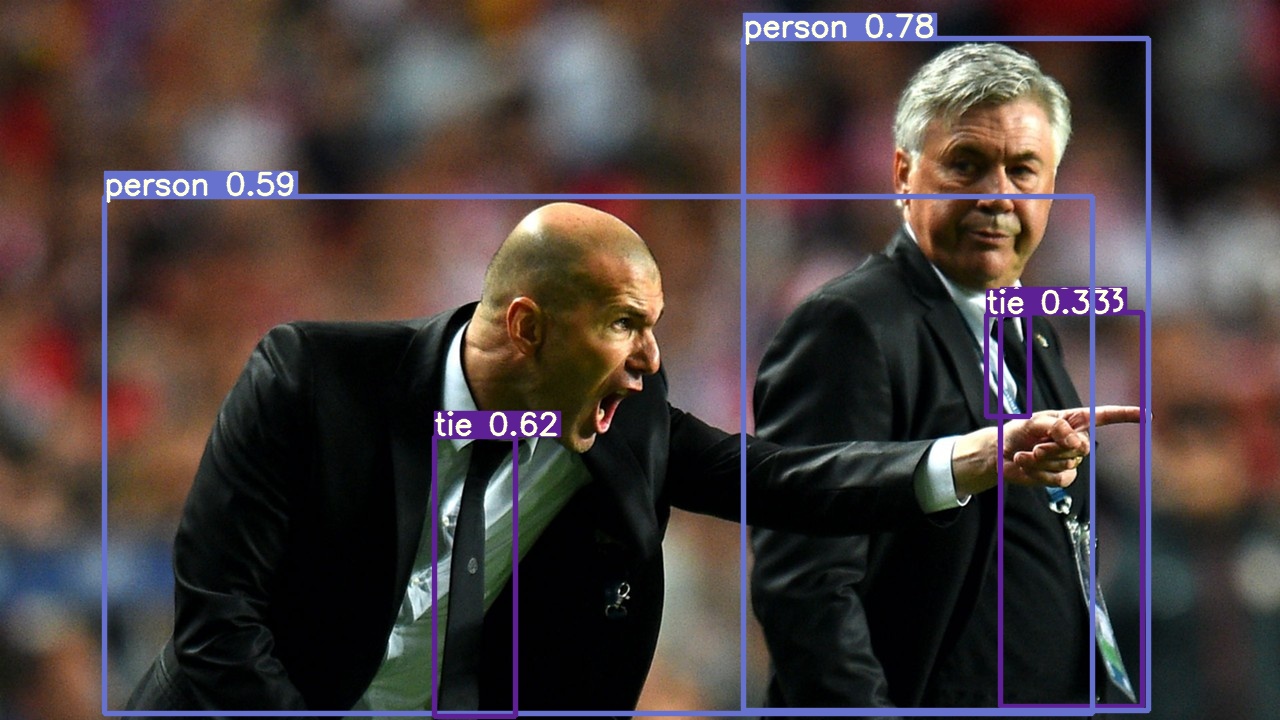

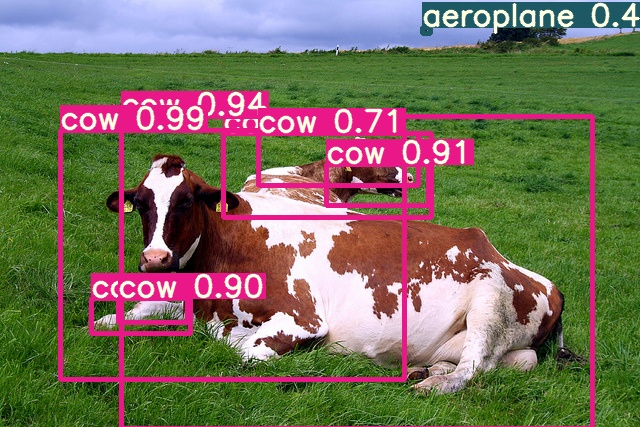

detect.py runs inference on a variety of sources, downloading models automatically from the latest YOLOv3 release and saving results to runs/detect.

$ python detect.py --source 0  # webcam
                            file.jpg  # image
                            file.mp4  # video
                            path/  # directory
                            path/*.jpg  # glob
                            'https://youtu.be/NUsoVlDFqZg'  # YouTube video
                            'rtsp://example.com/media.mp4'  # RTSP, RTMP, HTTP stream

To run inference on example images in data/images:

$ python detect.py --source data/images --weights yolov3.pt --conf 0.25

PyTorch Hub

To run batched inference with YOLOv3 and PyTorch Hub:

import torch

# Model

model = torch.hub.load('ultralytics/yolov3', 'yolov3') # or 'yolov3_spp', 'yolov3_tiny'

# Image

img = 'https://ultralytics.com/images/zidane.jpg'

# Inference

results = model(img)

results.print() # or .show(), .save()
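The example above passes a single image. A minimal sketch of batching several sources follows, assuming that the Hub model accepts a list of image paths or URLs in one call (as the "batched inference" wording suggests). The helpers `batches` and `run_batched`, and the default batch size of 8, are hypothetical and not part of the repository.

```python
from typing import Iterator, List, Sequence


def batches(items: Sequence[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive chunks of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


def run_batched(sources: Sequence[str], batch_size: int = 8) -> None:
    """Run the Hub model over `sources`, one batch at a time."""
    import torch  # imported lazily so `batches` is usable without PyTorch

    model = torch.hub.load('ultralytics/yolov3', 'yolov3')
    for batch in batches(sources, batch_size):
        # Assumption: a list of sources is processed as one batch.
        results = model(batch)
        results.print()  # or .show(), .save()
```

Calling `run_batched(['https://ultralytics.com/images/zidane.jpg'] * 2)` would download the model on first use and run the same example image twice in one batch.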

Training

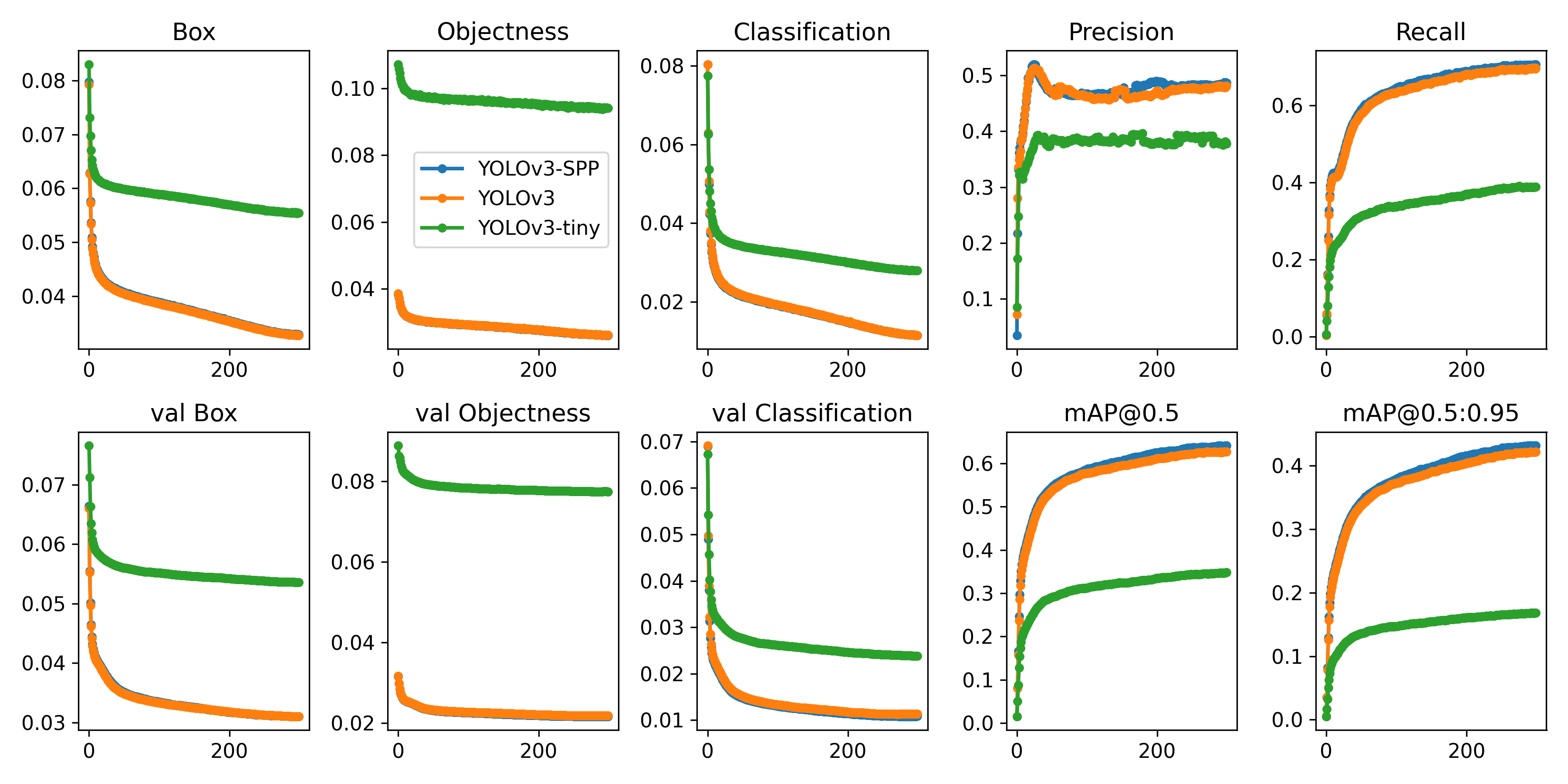

Run commands below to reproduce results on COCO dataset (dataset auto-downloads on first use). Training times for YOLOv3/YOLOv3-SPP/YOLOv3-tiny are 6/6/2 days on a single V100 (multi-GPU times faster). Use the largest --batch-size your GPU allows (batch sizes shown for 16 GB devices).

$ python train.py --data coco.yaml --cfg yolov3.yaml      --weights '' --batch-size 24
                                         yolov3-spp.yaml                            24
                                         yolov3-tiny.yaml                           64
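The three training runs above differ only in `--cfg` and `--batch-size`. The following sketch builds each command from a small mapping, which can be convenient when scripting the runs. The mapping values are taken from the commands above; the `train_command` helper is hypothetical.

```python
import shlex
import sys

# Config -> batch size, as listed above for 16 GB devices.
CONFIGS = {"yolov3.yaml": 24, "yolov3-spp.yaml": 24, "yolov3-tiny.yaml": 64}


def train_command(cfg: str, batch_size: int, data: str = "coco.yaml") -> list:
    """Build the argv list for one from-scratch training run (empty --weights)."""
    return [sys.executable, "train.py", "--data", data, "--cfg", cfg,
            "--weights", "", "--batch-size", str(batch_size)]


commands = [train_command(cfg, bs) for cfg, bs in CONFIGS.items()]
for argv in commands:
    # Print a shell-quoted form of each command for inspection.
    print("python", shlex.join(argv[1:]))
```

Each list can be passed to `subprocess.run(argv, check=True)` from the repository root to launch the run. Lower the batch sizes if your GPU has less than 16 GB of memory.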

Citation

About Us

Ultralytics is a U.S.-based particle physics and AI startup with over 6 years of expertise supporting government, academic and business clients. We offer a wide range of vision AI services, spanning from simple expert advice up to delivery of fully customized, end-to-end production solutions, including:

- Cloud-based AI systems operating on hundreds of HD video streams in realtime.

- Edge AI integrated into custom iOS and Android apps for realtime 30 FPS video inference.

- Custom data training, hyperparameter evolution, and model exportation to any destination.

For business inquiries and professional support requests please visit us at https://ultralytics.com.

Contact

Issues should be raised directly in the repository. For business inquiries or professional support requests please visit https://ultralytics.com or email Glenn Jocher at [email protected].

If I do not train, the original weights give this result: