🆕

Are you looking for a new YOLOv3 implemented by TF2.0 ?

If you hate the fucking tensorflow1.x very much, no worries! I have implemented a new YOLOv3 repo with TF2.0, and also made a chinese blog on how to implement YOLOv3 object detector from scratch.

code | blog | issue

part 1. Quick start

- Clone this file

$ git clone https://github.com/YunYang1994/tensorflow-yolov3.git

- You are supposed to install some dependencies before getting out hands with these codes.

$ cd tensorflow-yolov3

$ pip install -r ./docs/requirements.txt

- Exporting loaded COCO weights as TF checkpoint(

yolov3_coco.ckpt)【BaiduCloud】

$ cd checkpoint

$ wget https://github.com/YunYang1994/tensorflow-yolov3/releases/download/v1.0/yolov3_coco.tar.gz

$ tar -xvf yolov3_coco.tar.gz

$ cd ..

$ python convert_weight.py

$ python freeze_graph.py

- Then you will get some

.pbfiles in the root path., and run the demo script

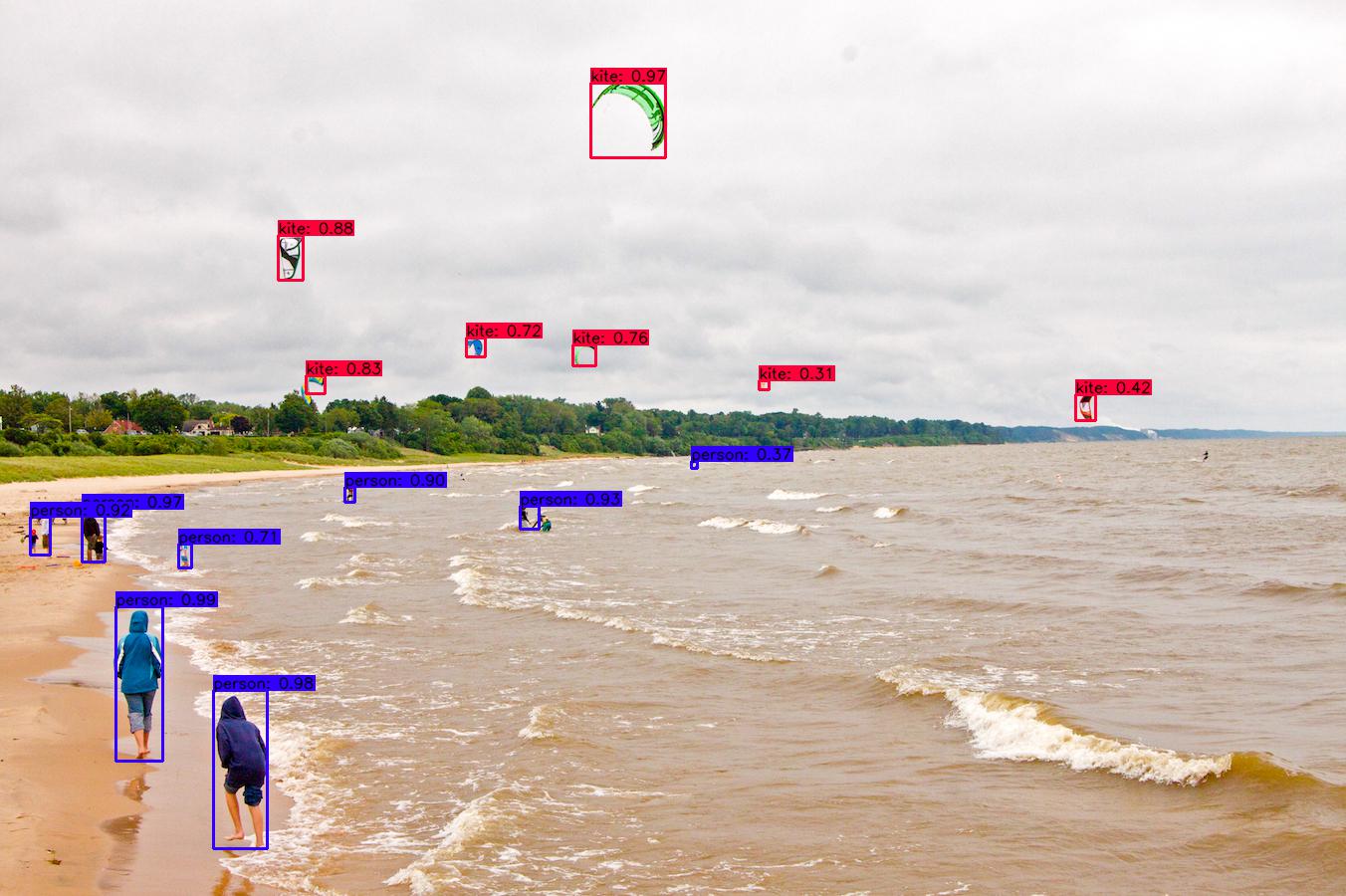

$ python image_demo.py

$ python video_demo.py # if use camera, set video_path = 0

part 2. Train your own dataset

Two files are required as follows:

xxx/xxx.jpg 18.19,6.32,424.13,421.83,20 323.86,2.65,640.0,421.94,20

xxx/xxx.jpg 48,240,195,371,11 8,12,352,498,14

# image_path x_min, y_min, x_max, y_max, class_id x_min, y_min ,..., class_id

# make sure that x_max < width and y_max < height

person

bicycle

car

...

toothbrush

2.1 Train on VOC dataset

Download VOC PASCAL trainval and test data

$ wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtrainval_06-Nov-2007.tar

$ wget http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar

$ wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtest_06-Nov-2007.tar

Extract all of these tars into one directory and rename them, which should have the following basic structure.

VOC # path: /home/yang/dataset/VOC

├── test

| └──VOCdevkit

| └──VOC2007 (from VOCtest_06-Nov-2007.tar)

└── train

└──VOCdevkit

└──VOC2007 (from VOCtrainval_06-Nov-2007.tar)

└──VOC2012 (from VOCtrainval_11-May-2012.tar)

$ python scripts/voc_annotation.py --data_path /home/yang/test/VOC

Then edit your ./core/config.py to make some necessary configurations

__C.YOLO.CLASSES = "./data/classes/voc.names"

__C.TRAIN.ANNOT_PATH = "./data/dataset/voc_train.txt"

__C.TEST.ANNOT_PATH = "./data/dataset/voc_test.txt"

Here are two kinds of training method:

(1) train from scratch:

$ python train.py

$ tensorboard --logdir ./data

(2) train from COCO weights(recommend):

$ cd checkpoint

$ wget https://github.com/YunYang1994/tensorflow-yolov3/releases/download/v1.0/yolov3_coco.tar.gz

$ tar -xvf yolov3_coco.tar.gz

$ cd ..

$ python convert_weight.py --train_from_coco

$ python train.py

2.2 Evaluate on VOC dataset

$ python evaluate.py

$ cd mAP

$ python main.py -na

the mAP on the VOC2012 dataset:

part 3. Other Implementations

-YOLOv3目标检测有了TensorFlow实现,可用自己的数据来训练

- Implementing YOLO v3 in Tensorflow (TF-Slim)