MMdnn


MMdnn is a comprehensive, cross-framework tool to convert, visualize and diagnose deep learning (DL) models. The "MM" stands for model management, and "dnn" is an acronym for deep neural network.

Major features include:

- Model Conversion

  - We implement a universal converter to convert DL models between frameworks, which means you can train a model with one framework and deploy it with another.

- Model Retraining

  - During the model conversion, we generate some code snippets to simplify later retraining or inference.

- Model Search & Visualization

  - We provide a model collection to help you find some popular models.

  - We provide a model visualizer to display the network architecture more intuitively.

- Model Deployment

Related Projects

With the goals of openness and advancing state-of-the-art technology, Microsoft Research (MSR) and Microsoft Software Technology Center (STC) have also released a few other open source projects:

- OpenPAI: an open source platform that provides complete AI model training and resource management capabilities. It is easy to extend and supports on-premise, cloud and hybrid environments at various scales.

- FrameworkController: an open source, general-purpose Kubernetes Pod Controller that orchestrates all kinds of applications on Kubernetes with a single controller.

- NNI: a lightweight but powerful toolkit that helps users automate Feature Engineering, Neural Architecture Search, Hyperparameter Tuning and Model Compression.

- NeuronBlocks: an NLP deep learning modeling toolkit that helps engineers build DNN models like playing with Lego. Its main goal is to minimize the development cost of building NLP deep neural network models, covering both the training and inference stages.

- SPTAG: Space Partition Tree And Graph (SPTAG) is an open source library for large-scale approximate nearest neighbor search over vectors.

We encourage researchers, developers and students to leverage these projects to boost their AI / Deep Learning productivity.

Installation

Install manually

You can install a stable version of MMdnn with:

pip install mmdnn

Make sure Python is installed first. Alternatively, you can try the newest version with:

pip install -U git+https://github.com/Microsoft/[email protected]

Install with docker image

MMdnn provides a docker image that packages MMdnn, the deep learning frameworks it supports, and other dependencies. You can try the image with the following steps:

- Install Docker Community Edition (CE).

- Pull the MMdnn docker image:

  docker pull mmdnn/mmdnn:cpu.small

- Run the image in interactive mode:

  docker run -it mmdnn/mmdnn:cpu.small

Features

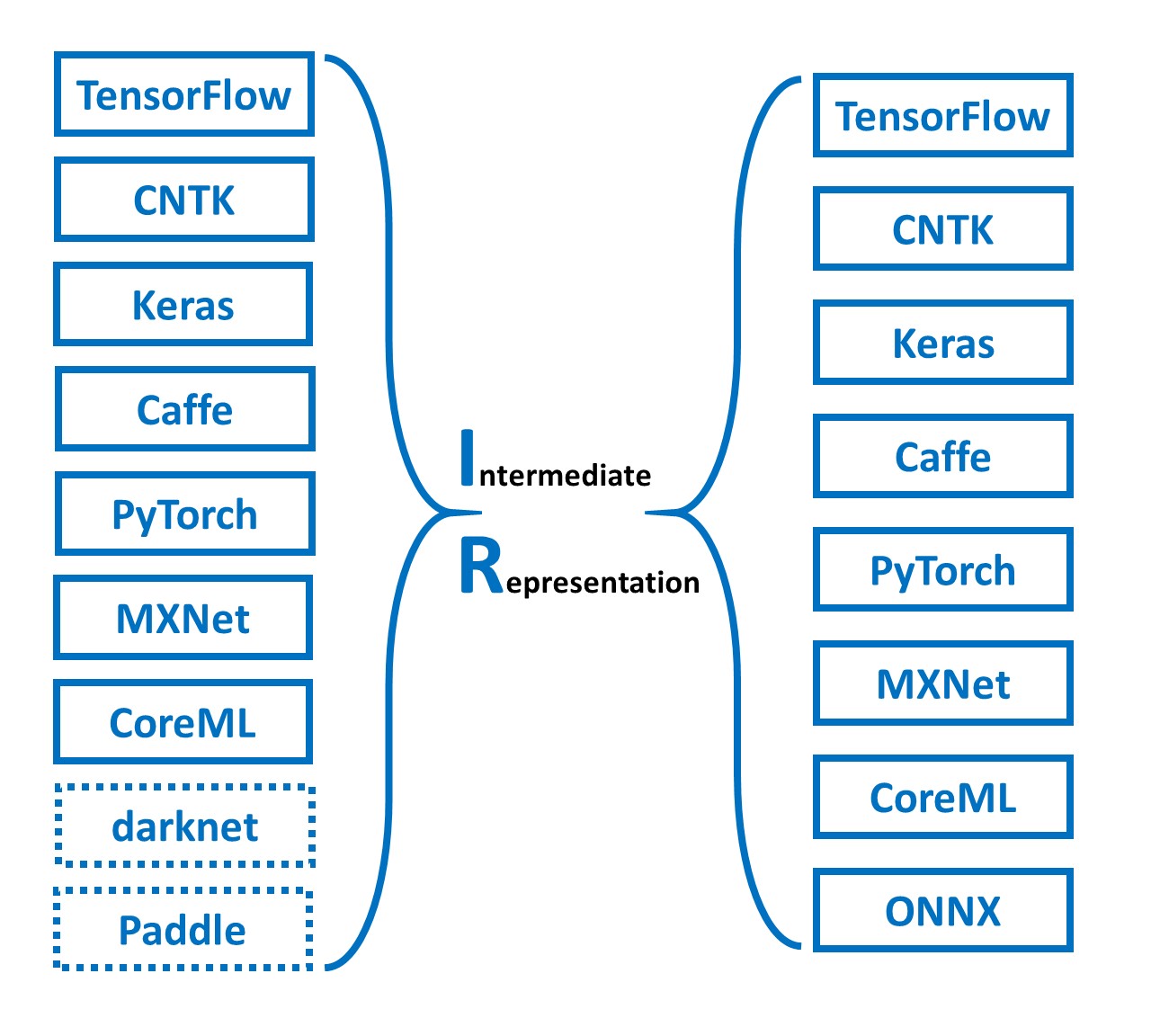

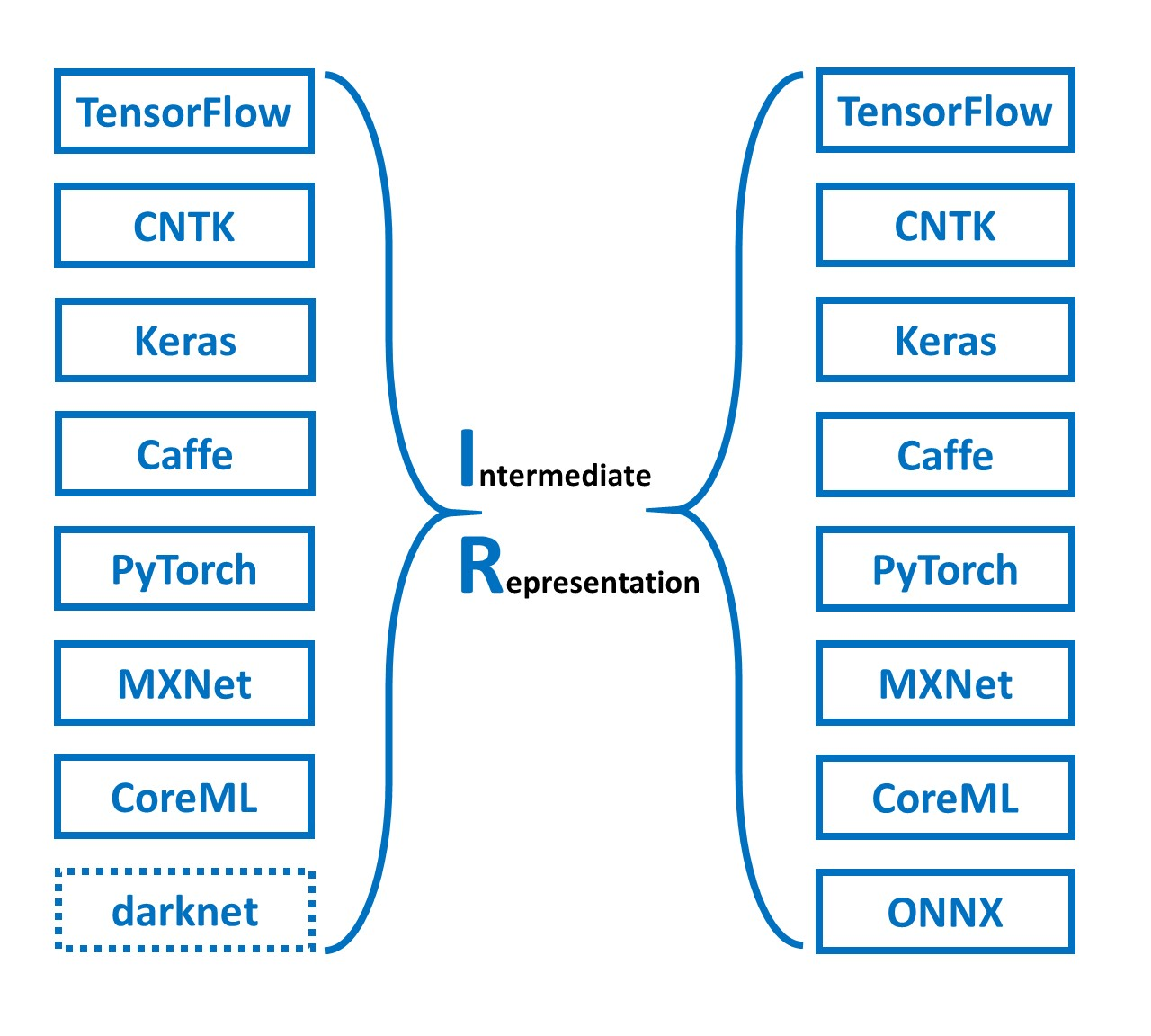

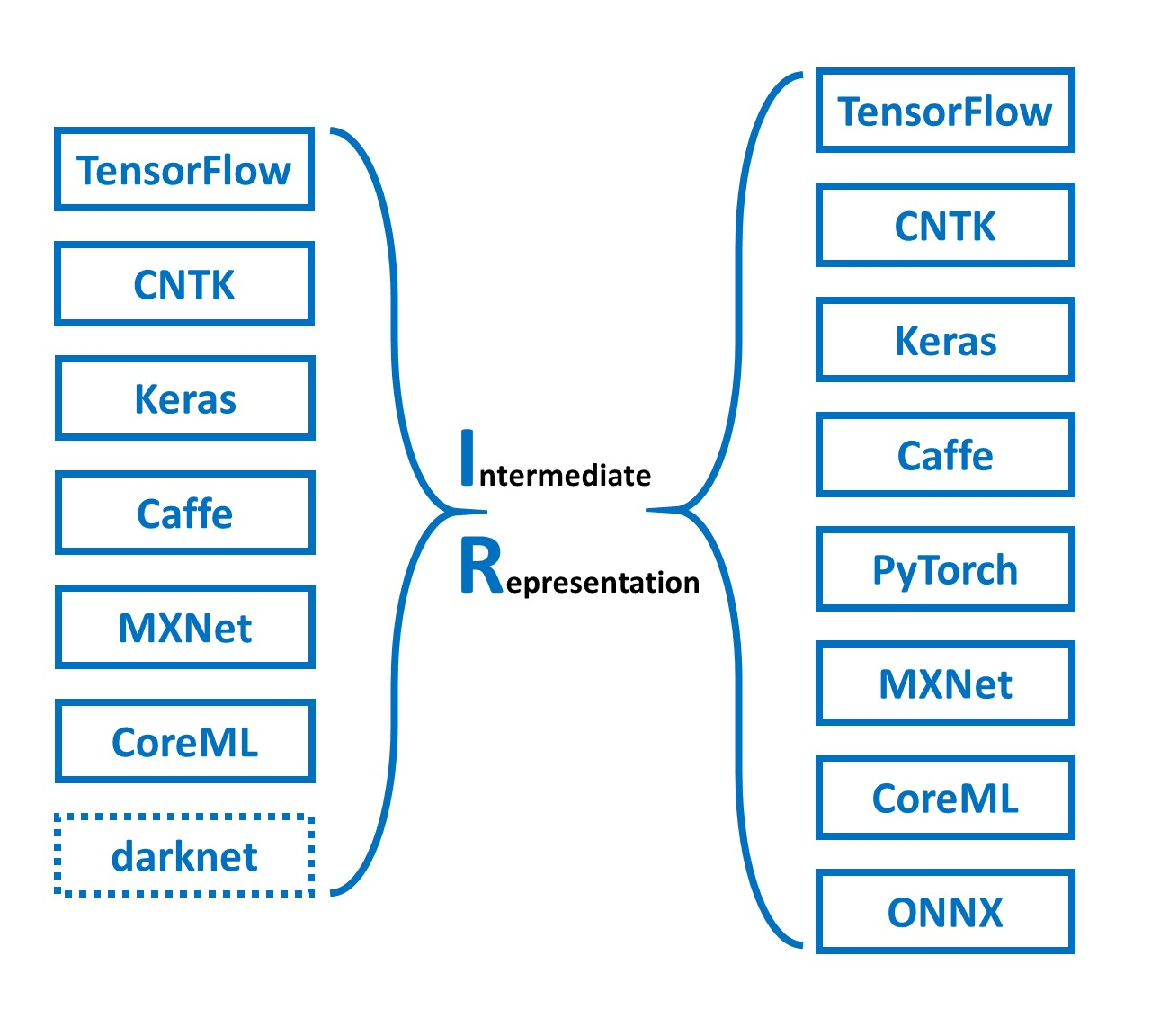

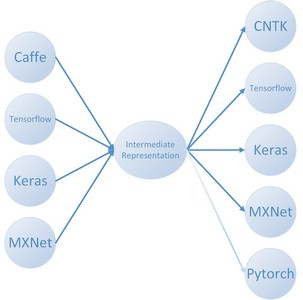

Model Conversion

Across industry and academia, developers and researchers can design models with a number of existing frameworks, and each framework has its own network structure definition and model saving format. The gaps between frameworks impede the interoperation of models.

We provide a model converter to help developers convert models between frameworks through an intermediate representation format.
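Because every framework only has to talk to the intermediate representation, each one needs a parser (framework to IR) and/or an emitter (IR to framework) rather than a dedicated converter for every source/destination pair. The short Python sketch below counts both designs for the supported frameworks listed below. It assumes that "Source only" means a framework has a parser but no emitter and "Destination only" means the reverse; the dictionary and function names are illustrative and not part of MMdnn.

```python
# Illustrative sketch: how many converters are needed with and without an IR.
# Assumption: "Source only" = parser only, "Destination only" = emitter only.
FRAMEWORKS = {
    # name: (has_parser, has_emitter)
    "Caffe": (True, True),
    "CNTK": (True, True),
    "CoreML": (True, True),
    "Keras": (True, True),
    "MXNet": (True, True),
    "ONNX": (False, True),      # destination only
    "PyTorch": (True, True),
    "TensorFlow": (True, True),
    "DarkNet": (True, False),   # source only
}


def direct_converter_count(frameworks):
    """One dedicated converter per (source, destination) pair of distinct frameworks."""
    sources = [name for name, (parser, _) in frameworks.items() if parser]
    dests = [name for name, (_, emitter) in frameworks.items() if emitter]
    return sum(1 for s in sources for d in dests if s != d)


def ir_component_count(frameworks):
    """One parser (framework -> IR) and/or one emitter (IR -> framework) per framework."""
    return sum(int(parser) + int(emitter) for parser, emitter in frameworks.values())


print(direct_converter_count(FRAMEWORKS))  # 57
print(ir_component_count(FRAMEWORKS))      # 16
```

Under these assumptions, 16 parser/emitter components cover all 57 source/destination pairs, and adding a framework with both directions costs two new components instead of a new converter to and from every existing framework.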

Supported frameworks

[Note] You can click the links to get the detailed README for each framework.

- Caffe

- Microsoft Cognitive Toolkit (CNTK)

- CoreML

- Keras

- MXNet

- ONNX (Destination only)

- PyTorch

- TensorFlow (Experimental) (we highly recommend reading the TensorFlow README first)

- DarkNet (Source only, Experimental)

Tested models

The model conversion between currently supported frameworks is tested on some ImageNet models.

| Models | Caffe | Keras | TensorFlow | CNTK | MXNet | PyTorch | CoreML | ONNX |
|---|---|---|---|---|---|---|---|---|
| VGG 19 | √ | √ | √ | √ | √ | √ | √ | √ |
| Inception V1 | √ | √ | √ | √ | √ | √ | √ | √ |
| Inception V3 | √ | √ | √ | √ | √ | √ | √ | √ |
| Inception V4 | √ | √ | √ | o | √ | √ | √ | √ |
| ResNet V1 | × | √ | √ | o | √ | √ | √ | √ |
| ResNet V2 | √ | √ | √ | √ | √ | √ | √ | √ |
| MobileNet V1 | × | √ | √ | o | √ | √ | √ | √ |
| MobileNet V2 | × | √ | √ | o | √ | √ | √ | √ |
| Xception | √ | √ | √ | o | × | √ | √ | √ |
| SqueezeNet | √ | √ | √ | √ | √ | √ | √ | √ |
| DenseNet | √ | √ | √ | √ | √ | √ | √ | √ |
| NASNet | × | √ | √ | o | √ | √ | √ | × |
| ResNext | √ | √ | √ | √ | √ | √ | √ | √ |
| voc FCN | √ | √ | | | | | | |
| Yolo3 | √ | √ | | | | | | |

Usage

After downloading the model, a single command performs the conversion. Taking TensorFlow ResNet V2 152 to PyTorch as an example:

$ mmdownload -f tensorflow -n resnet_v2_152 -o ./

$ mmconvert -sf tensorflow -in imagenet_resnet_v2_152.ckpt.meta -iw imagenet_resnet_v2_152.ckpt --dstNodeName MMdnn_Output -df pytorch -om tf_resnet_to_pth.pth

Done.
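If you convert several models, it can help to build the command in a script. The sketch below assembles the same mmconvert call in Python using only the flags shown in the example above (`-sf`, `-in`, `-iw`, `--dstNodeName`, `-df`, `-om`). The helper names `build_mmconvert_cmd` and `run_mmconvert` are hypothetical, and actually running the command assumes MMdnn is installed and the checkpoint has already been fetched with mmdownload.

```python
import shlex
import subprocess


def build_mmconvert_cmd(src_framework, network_file, weight_file,
                        dst_framework, output_model, dst_node_name=None):
    """Assemble an mmconvert argv from the flags used in the example above."""
    cmd = ["mmconvert",
           "-sf", src_framework,   # source framework
           "-in", network_file,    # network structure file
           "-iw", weight_file]     # weight file
    if dst_node_name is not None:
        cmd += ["--dstNodeName", dst_node_name]
    cmd += ["-df", dst_framework,  # destination framework
            "-om", output_model]   # output model path
    return cmd


def run_mmconvert(cmd):
    """Run the command; raises CalledProcessError if mmconvert exits non-zero."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    cmd = build_mmconvert_cmd(
        "tensorflow",
        "imagenet_resnet_v2_152.ckpt.meta",
        "imagenet_resnet_v2_152.ckpt",
        "pytorch",
        "tf_resnet_to_pth.pth",
        dst_node_name="MMdnn_Output",
    )
    print(shlex.join(cmd))  # same command line as the example above
    # run_mmconvert(cmd)  # uncomment once MMdnn and the checkpoint are available
```

Passing an argument list to `subprocess.run` avoids shell quoting issues when file names come from a loop or a config file.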

On-going frameworks

- Torch7 (help wanted)

- Chainer (help wanted)

On-going Models

- Face Detection

- Semantic Segmentation

- Image Style Transfer

- Object Detection

- RNN

Model Visualization

We provide a local visualizer to display the network architecture of a deep learning model. Please refer to the instructions.

Examples

Official Tutorial

Users' Examples

Contributing

Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.microsoft.com.

When you submit a pull request, a CLA-bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., label, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information, see the Code of Conduct FAQ or contact [email protected] with any additional questions or comments.

Intermediate Representation

The intermediate representation stores the network architecture in protobuf binary and pre-trained weights in NumPy native format.

[Note!] Currently, the IR weight data is stored in NHWC (channels-last) format.

Details are in ops.txt and graph.proto. New operators and any comments are welcome.
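To make the NHWC note concrete: in a contiguous channels-last buffer the channel index varies fastest, so the values of all channels at one spatial position sit next to each other. The stdlib-only Python sketch below computes flat offsets for both layouts and reorders a small NCHW buffer into NHWC. The function names are illustrative, and the example uses a generic 4-D tensor; it does not claim how MMdnn orders the axes of every weight type. With NumPy, the same reorder of an NCHW array `x` is `x.transpose(0, 2, 3, 1)`.

```python
def nchw_offset(n, c, h, w, C, H, W):
    """Flat index of element (n, c, h, w) in a contiguous channels-first buffer."""
    return ((n * C + c) * H + h) * W + w


def nhwc_offset(n, c, h, w, C, H, W):
    """Flat index of element (n, c, h, w) in a contiguous channels-last buffer."""
    return ((n * H + h) * W + w) * C + c


def nchw_to_nhwc(buf, N, C, H, W):
    """Return a new flat list holding the NCHW buffer `buf` in NHWC order."""
    out = [None] * (N * C * H * W)
    for n in range(N):
        for c in range(C):
            for h in range(H):
                for w in range(W):
                    src = nchw_offset(n, c, h, w, C, H, W)
                    dst = nhwc_offset(n, c, h, w, C, H, W)
                    out[dst] = buf[src]
    return out


# A 1x2x2x2 tensor: channel 0 holds 0..3, channel 1 holds 10..13.
nchw = [0, 1, 2, 3, 10, 11, 12, 13]
print(nchw_to_nhwc(nchw, 1, 2, 2, 2))  # [0, 10, 1, 11, 2, 12, 3, 13]
```

The output interleaves the two channels pixel by pixel, which is exactly what "channels last" means for the stored data.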

Frameworks

We are working on conversion and visualization support for other frameworks, such as PyTorch and CoreML, and we are investigating more RNN-related operators. Any contributions and suggestions are welcome! See the Contribution Guideline for details.

Authors

Yu Liu (Peking University): Project Developer & Maintainer

Cheng CHEN (Microsoft Research Asia): Caffe, CNTK, CoreML Emitter, Keras, MXNet, TensorFlow

Jiahao YAO (Peking University): CoreML, MXNet Emitter, PyTorch Parser; HomePage

Ru ZHANG (Chinese Academy of Sciences): CoreML Emitter, DarkNet Parser, Keras, TensorFlow frozen graph Parser; Yolo and SSD models; Tests

Yuhao ZHOU (Shanghai Jiao Tong University): MXNet

Tingting QIN (Microsoft Research Asia): Caffe Emitter

Tong ZHAN (Microsoft): ONNX Emitter

Qianwen WANG (Hong Kong University of Science and Technology): Visualization

Acknowledgements

Thanks to Saumitro Dasgupta: the initial code for Caffe -> IR conversion draws on his project caffe-tensorflow.

License

Licensed under the MIT license.