Hi all,

while trying to run a spacy test

import spacy

import crosslingual_coreference

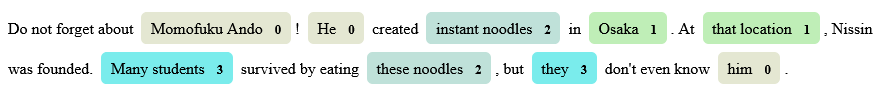

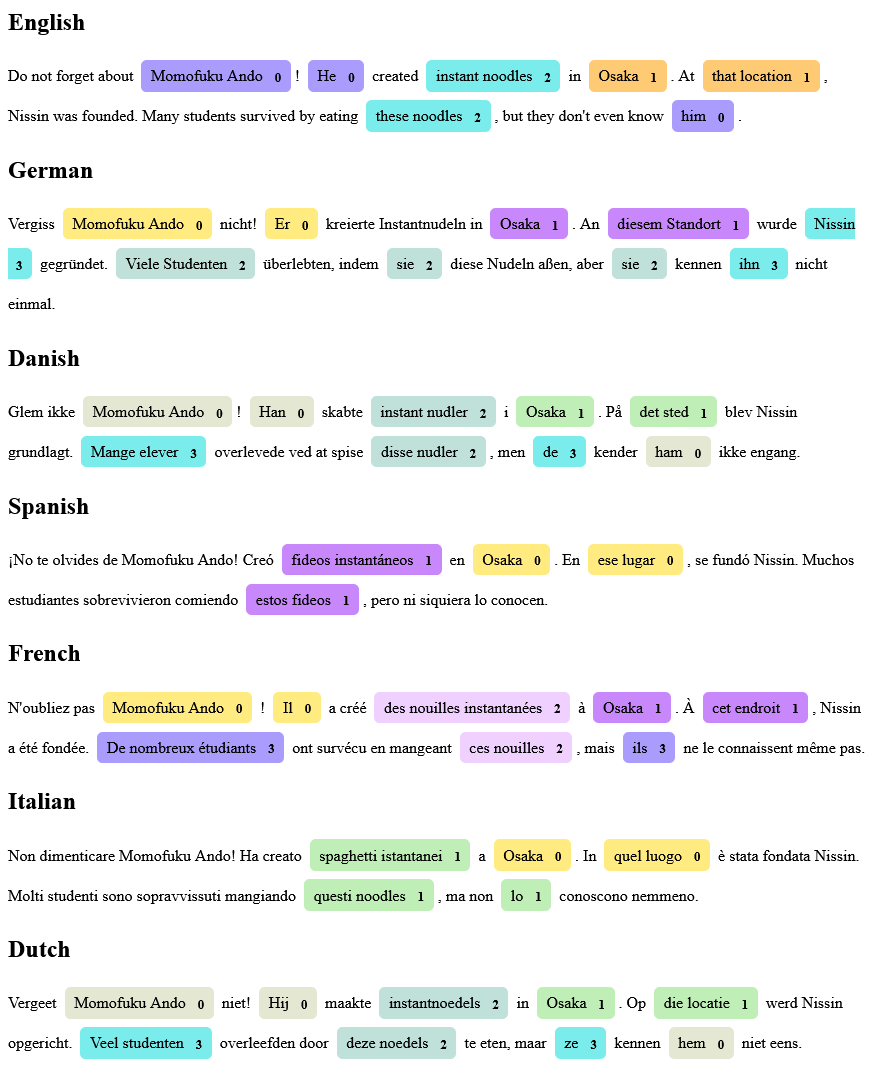

text = """

Do not forget about Momofuku Ando!

He created instant noodles in Osaka.

At that location, Nissin was founded.

Many students survived by eating these noodles, but they don't even know him."""

# use any model that has internal spacy embeddings

nlp = spacy.load('en_core_web_sm')

nlp.add_pipe(

"xx_coref", config={"chunk_size": 2500, "chunk_overlap": 2, "device": 0})

doc = nlp(text)

print(doc._.coref_clusters)

print(doc._.resolved_text)

I encountered the following issue:

[nltk_data] Downloading package omw-1.4 to

[nltk_data] /home/user/nltk_data...

[nltk_data] Package omw-1.4 is already up-to-date!

Traceback (most recent call last):

File "/home/user/test_coref/test.py", line 12, in <module>

nlp.add_pipe(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/spacy/language.py", line 792, in add_pipe

pipe_component = self.create_pipe(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/spacy/language.py", line 674, in create_pipe

resolved = registry.resolve(cfg, validate=validate)

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/thinc/config.py", line 746, in resolve

resolved, _ = cls._make(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/thinc/config.py", line 795, in _make

filled, _, resolved = cls._fill(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/thinc/config.py", line 867, in _fill

getter_result = getter(*args, **kwargs)

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/crosslingual_coreference/__init__.py", line 33, in make_crosslingual_coreference

return SpacyPredictor(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/crosslingual_coreference/CrossLingualPredictorSpacy.py", line 18, in __init__

super().__init__(language, device, model_name, chunk_size, chunk_overlap)

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/crosslingual_coreference/CrossLingualPredictor.py", line 55, in __init__

self.set_coref_model()

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/crosslingual_coreference/CrossLingualPredictor.py", line 85, in set_coref_model

self.predictor = Predictor.from_path(self.filename, language=self.language, cuda_device=self.device)

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/predictors/predictor.py", line 366, in from_path

load_archive(archive_path, cuda_device=cuda_device, overrides=overrides),

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/models/archival.py", line 232, in load_archive

dataset_reader, validation_dataset_reader = _load_dataset_readers(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/models/archival.py", line 268, in _load_dataset_readers

dataset_reader = DatasetReader.from_params(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/common/from_params.py", line 604, in from_params

return retyped_subclass.from_params(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/common/from_params.py", line 636, in from_params

kwargs = create_kwargs(constructor_to_inspect, cls, params, **extras)

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/common/from_params.py", line 206, in create_kwargs

constructed_arg = pop_and_construct_arg(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/common/from_params.py", line 314, in pop_and_construct_arg

return construct_arg(class_name, name, popped_params, annotation, default, **extras)

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/common/from_params.py", line 394, in construct_arg

value_dict[key] = construct_arg(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/common/from_params.py", line 348, in construct_arg

result = annotation.from_params(params=popped_params, **subextras)

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/common/from_params.py", line 604, in from_params

return retyped_subclass.from_params(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/common/from_params.py", line 638, in from_params

return constructor_to_call(**kwargs) # type: ignore

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/data/token_indexers/pretrained_transformer_mismatched_indexer.py", line 58, in __init__

self._matched_indexer = PretrainedTransformerIndexer(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/data/token_indexers/pretrained_transformer_indexer.py", line 56, in __init__

self._allennlp_tokenizer = PretrainedTransformerTokenizer(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/data/tokenizers/pretrained_transformer_tokenizer.py", line 72, in __init__

self.tokenizer = cached_transformers.get_tokenizer(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/allennlp/common/cached_transformers.py", line 204, in get_tokenizer

tokenizer = transformers.AutoTokenizer.from_pretrained(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/transformers/models/auto/tokenization_auto.py", line 546, in from_pretrained

return tokenizer_class_fast.from_pretrained(pretrained_model_name_or_path, *inputs, **kwargs)

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/transformers/tokenization_utils_base.py", line 1788, in from_pretrained

return cls._from_pretrained(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/transformers/tokenization_utils_base.py", line 1923, in _from_pretrained

tokenizer = cls(*init_inputs, **init_kwargs)

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/transformers/models/xlm_roberta/tokenization_xlm_roberta_fast.py", line 140, in __init__

super().__init__(

File "/home/user/test_coref/.venv/lib/python3.9/site-packages/transformers/tokenization_utils_fast.py", line 110, in __init__

fast_tokenizer = TokenizerFast.from_file(fast_tokenizer_file)

Exception: EOF while parsing a list at line 1 column 4920583

Here's what I have installed (pulled by poetry add crosslingual-coreference or pip install crosslingual-coreference):

(.venv) [email protected]$ pip freeze

aiohttp==3.8.1

aiosignal==1.2.0

allennlp==2.9.3

allennlp-models==2.9.3

async-timeout==4.0.2

attrs==21.4.0

base58==2.1.1

blis==0.7.7

boto3==1.23.5

botocore==1.26.5

cached-path==1.1.2

cachetools==5.1.0

catalogue==2.0.7

certifi==2022.5.18.1

charset-normalizer==2.0.12

click==8.0.4

conllu==4.4.1

crosslingual-coreference==0.2.4

cymem==2.0.6

datasets==2.2.1

dill==0.3.5.1

docker-pycreds==0.4.0

en-core-web-sm @ https://github.com/explosion/spacy-models/releases/download/en_core_web_sm-3.2.0/en_core_web_sm-3.2.0-py3-none-any.whl

en-core-web-trf @ https://github.com/explosion/spacy-models/releases/download/en_core_web_trf-3.2.0/en_core_web_trf-3.2.0-py3-none-any.whl

fairscale==0.4.6

filelock==3.6.0

frozenlist==1.3.0

fsspec==2022.5.0

ftfy==6.1.1

gitdb==4.0.9

GitPython==3.1.27

google-api-core==2.8.0

google-auth==2.6.6

google-cloud-core==2.3.0

google-cloud-storage==2.3.0

google-crc32c==1.3.0

google-resumable-media==2.3.3

googleapis-common-protos==1.56.1

h5py==3.6.0

huggingface-hub==0.5.1

idna==3.3

iniconfig==1.1.1

Jinja2==3.1.2

jmespath==1.0.0

joblib==1.1.0

jsonnet==0.18.0

langcodes==3.3.0

lmdb==1.3.0

MarkupSafe==2.1.1

more-itertools==8.13.0

multidict==6.0.2

multiprocess==0.70.12.2

murmurhash==1.0.7

nltk==3.7

numpy==1.22.4

packaging==21.3

pandas==1.4.2

pathtools==0.1.2

pathy==0.6.1

Pillow==9.1.1

pluggy==1.0.0

preshed==3.0.6

promise==2.3

protobuf==3.20.1

psutil==5.9.1

py==1.11.0

py-rouge==1.1

pyarrow==8.0.0

pyasn1==0.4.8

pyasn1-modules==0.2.8

pydantic==1.8.2

pyparsing==3.0.9

pytest==7.1.2

python-dateutil==2.8.2

pytz==2022.1

PyYAML==6.0

regex==2022.4.24

requests==2.27.1

responses==0.18.0

rsa==4.8

s3transfer==0.5.2

sacremoses==0.0.53

scikit-learn==1.1.1

scipy==1.6.1

sentence-transformers==2.2.0

sentencepiece==0.1.96

sentry-sdk==1.5.12

setproctitle==1.2.3

shortuuid==1.0.9

six==1.16.0

smart-open==5.2.1

smmap==5.0.0

spacy==3.2.4

spacy-alignments==0.8.5

spacy-legacy==3.0.9

spacy-loggers==1.0.2

spacy-sentence-bert==0.1.2

spacy-transformers==1.1.5

srsly==2.4.3

tensorboardX==2.5

termcolor==1.1.0

thinc==8.0.16

threadpoolctl==3.1.0

tokenizers==0.12.1

tomli==2.0.1

torch==1.10.2

torchaudio==0.10.2

torchvision==0.11.3

tqdm==4.64.0

transformers==4.17.0

typer==0.4.1

typing-extensions==4.2.0

urllib3==1.26.9

wandb==0.12.16

wasabi==0.9.1

wcwidth==0.2.5

word2number==1.1

xxhash==3.0.0

yarl==1.7.2

Do you have any recommendations?

Is there an installation step missing?

Thanks in advance!