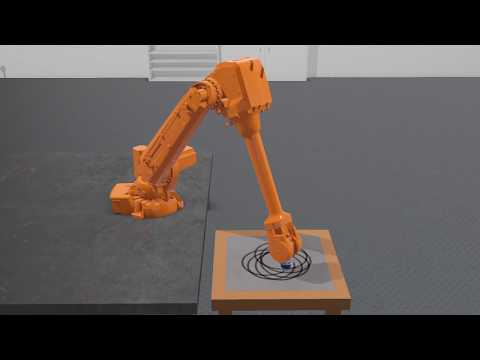

Hi, I try to conduct to draw a straight line by calculating inverse kinematics with niryo one robot.

input position (x : 0.073, y : 0.001, z : 0.168)

input orientation (roll : 0.029, yaw: 1.407, pitch: 0.204)

Answer of joints 1 ~ 6 axis : [ -0.007, 0.129, -1.360, 0.189, -0.183, -0.360]

I use blow's model.urdf code.

Then, calculate inverse kinematics using these code lines.

Do you know any solution to solve this problem?

from ikpy.chain import Chain

my_chain = Chain.from_urdf_file("model.urdf")

print(my_chain)

ik = my_chain.inverse_kinematics(target_position=[0.083, 0.001, 0.168], target_orientation=[0.029, 1.407, 0.203],orientation_mode="all")

print(ik)

print(my_chain.forward_kinematics(ik)[:3, 3])

print(my_chain.forward_kinematics(ik))

I think the calculation of inverse kinematics is bad.

The first line is correct, although the second line is wrong.

print(my_chain.forward_kinematics([0, 0.030, 0.152, -1.334, -0.184, -0.029, -0.030, 0])[:3, 3])

print(my_chain.inverse_kinematics(target_position=my_chain.forward_kinematics([0, 0.030, 0.152, -1.334, -0.184, -0.029, -0.030, 0])[:3, 3]))

<?xml version="1.0" ?>

<!-- =================================================================================== -->

<!-- | This document was autogenerated by xacro from niryo_one.urdf.xacro | -->

<!-- | EDITING THIS FILE BY HAND IS NOT RECOMMENDED | -->

<!-- =================================================================================== -->

<!--

niryo_one.urdf.xacro

Copyright (C) 2017 Niryo

All rights reserved.

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU General Public License as published by

the Free Software Foundation, either version 3 of the License, or

(at your option) any later version.

This program is distributed in the hope that it will be useful,

but WITHOUT ANY WARRANTY; without even the implied warranty of

MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

GNU General Public License for more details.

You should have received a copy of the GNU General Public License

along with this program. If not, see <http://www.gnu.org/licenses/>.

-->

<robot name="niryo_one" xmlns:xacro="http://www.ros.org/wiki/xacro">

<!-- Properties -->

<!-- Links -->

<link name="world"/>

<link name="base_link">

<visual>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/collada/base_link.dae"/>

</geometry>

</visual>

<collision>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/stl/base_link.stl"/>

</geometry>

</collision>

</link>

<link name="shoulder_link">

<visual>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/collada/shoulder_link.dae"/>

</geometry>

</visual>

<collision>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/stl/shoulder_link.stl"/>

</geometry>

</collision>

</link>

<link name="arm_link">

<visual>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/collada/arm_link.dae"/>

</geometry>

</visual>

<collision>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/stl/arm_link.stl"/>

</geometry>

</collision>

</link>

<link name="elbow_link">

<visual>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/collada/elbow_link.dae"/>

</geometry>

</visual>

<collision>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/stl/elbow_link.stl"/>

</geometry>

</collision>

</link>

<link name="forearm_link">

<visual>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/collada/forearm_link.dae"/>

</geometry>

</visual>

<collision>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/stl/forearm_link.stl"/>

</geometry>

</collision>

</link>

<link name="wrist_link">

<visual>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/collada/wrist_link.dae"/>

</geometry>

</visual>

<collision>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/stl/wrist_link.stl"/>

</geometry>

</collision>

</link>

<link name="hand_link">

<visual>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/collada/hand_link.dae"/>

</geometry>

</visual>

<collision>

<origin rpy="0 0 0" xyz="0 0 0"/>

<geometry>

<mesh filename="package://niryo_one_description/meshes/v2/stl/hand_link.stl"/>

</geometry>

</collision>

</link>

<link name="tool_link">

</link>

<!--Joints -->

<joint name="joint_world" type="fixed">

<parent link="world"/>

<child link="base_link"/>

<origin rpy="0 0 0" xyz="0 0 0"/>

</joint>

<joint name="joint_1" type="revolute">

<parent link="base_link"/>

<child link="shoulder_link"/>

<origin rpy="0 0 0" xyz="0 0 0.103"/>

<axis xyz="0 0 1"/>

<limit effort="1" lower="-3.05433" upper="3.05433" velocity="1.0"/>

</joint>

<joint name="joint_2" type="revolute">

<parent link="shoulder_link"/>

<child link="arm_link"/>

<origin rpy="1.5707963268 -1.5707963268 0" xyz="0 0 0.08"/>

<limit effort="1" lower="-1.5708" upper="0.640187" velocity="1.0"/>

<axis xyz="0 0 1"/>

</joint>

<joint name="joint_3" type="revolute">

<parent link="arm_link"/>

<child link="elbow_link"/>

<origin rpy="0 0 -1.5707963268" xyz="0.21 0.0 0"/>

<limit effort="1" lower="-1.397485" upper="1.5707963268" velocity="1.0"/>

<axis xyz="0 0 1"/>

</joint>

<joint name="joint_4" type="revolute">

<parent link="elbow_link"/>

<child link="forearm_link"/>

<origin rpy="0 1.5707963268 0" xyz="0.0415 0.03 0"/>

<limit effort="1" lower="-3.05433" upper="3.05433" velocity="1.0"/>

<axis xyz="0 0 1"/>

</joint>

<joint name="joint_5" type="revolute">

<parent link="forearm_link"/>

<child link="wrist_link"/>

<origin rpy="0 -1.5707963268 0" xyz="0 0 0.18"/>

<limit effort="1" lower="-1.74533" upper="1.91986" velocity="1.0"/>

<axis xyz="0 0 1"/>

</joint>

<joint name="joint_6" type="revolute">

<parent link="wrist_link"/>

<child link="hand_link"/>

<origin rpy="0 1.5707963268 0" xyz="0.0164 -0.0055 0"/>

<limit effort="1" lower="-2.57436" upper="2.57436" velocity="1.0"/>

<axis xyz="0 0 1"/>

</joint>

<joint name="hand_tool_joint" type="fixed">

<parent link="hand_link"/>

<child link="tool_link"/>

<origin rpy="-1.5707963268 -1.5707963268 0" xyz="0 0 0.0073"/>

</joint>

</robot>

pinned robot-related